Your robot lawyer will see you now: two AIs negotiated a contract for the first time – without a human involved

Cold, calculating and robotic: lawyers of the future may live up to their exaggerated reputation as AI takes over the legal profession.

For the first time, two AIs created by legal technology company Luminance have successfully negotiated a contract without any human intervention.

The AIs went back and forth over the details of a real non-disclosure agreement between the company and proSapient, one of Luminance’s customers.

The contract was completed within minutes and the only time a human was needed was to sign.

This stunning demonstration comes just a week after Elon Musk predicted that AI would eventually create a jobless utopia where no one has to work.

AI could one day replace human lawyers entirely, now that two AIs have successfully negotiated a contract without human intervention at any stage

Speaking to Prime Minister Rishi Sunak at the Bletchley Park AI Summit, Musk said AI would be the most disruptive force in the history of labor and would ultimately eliminate the need for people to have jobs.

“There will come a point where a job is no longer needed,” Musk said at the summit.

“You can have a job if you want to have a job for personal satisfaction, but the AI can do anything.”

While automation has historically replaced repetitive physical labor, Luminance’s legal AI shows that even lawyers and other knowledge professions are not safe.

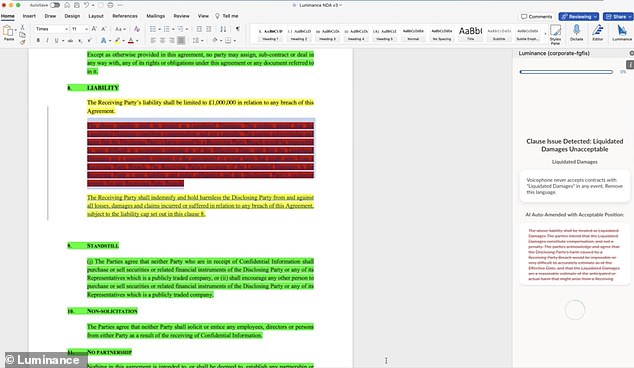

At the event in Luminance’s London office, the AIs independently read and analyzed the contract before proposing and making amendments.

Trained on a dataset of more than 150 million legal documents, the AI can understand areas of legal risk and learn from previous contracts.

For example, the non-disclosure agreement originally proposed a six-year term.

But Luminance’s AI recognized that this was against company policy and automatically rewrote it to a three-year term instead.

Luminance’s legal AI automatically highlights “red flags” in contracts and suggests changes that better fit company policy

Nick Emmerson, president of the Law Society, told MailOnline: “At this time and probably for the foreseeable future, AI will not be able to fully replace the function of legal expertise held by legally qualified professionals.”

He explained that this is because clients “have different needs and vulnerabilities that a machine cannot yet master and human judgment is needed to ensure that automated decisions do not result in potential false positives.”

“There is also an art to negotiation and ultimate bargaining that AI is unlikely to master,” he added.

Jaeger Glucina, chief of staff and managing director at Luminance, agrees that the technology is not intended to replace lawyers but to help them work better.

“AI represents a new, more efficient chapter in the future of legal work and one that could change the game forever,” she said.

“By putting the daily negotiations in the hands of an AI that is legally trained and understands your business, we give lawyers the freedom to focus their creativity where it counts.”

With legal teams spending up to 80 percent of their time reviewing routine documents, Luminance says these tools will free lawyers to focus on more important issues.

A week after Musk’s prediction that AI would eliminate the need for work, this development shows that even intellectual work such as legal counsel is not safe from automation

Contract negotiations, and especially non-disclosure agreements, can be an extremely time-consuming process because the contract must go back and forth between different teams.

Connagh McCormick, general counsel of proSapient, says the new technology will “accelerate the negotiation process, accelerate time to signing and ultimately increase company-wide efficiency.”

Although the process itself is fully automated, the AI records the changes made at each stage, allowing a human to oversee the entire process.

Just as AI tools have been adopted by computer programmers, Luminance envisions that the AI will function as a “co-pilot” for lawyers rather than operating independently.

Integrating AI into a legal environment is particularly challenging because the cost of an error is so high.

Mr Emmerson also warns that there is a risk that legal responsibility for errors will become unclear as AI becomes more widely used.

Earlier this year, a New York City attorney was sanctioned by a court after admitting that he used false information from ChatGPT in his legal research.

Steven Schwartz submitted a ten-page brief citing at least six completely fictional cases.

The world’s first robot lawyer also found itself in legal trouble after being sued for not having a law degree.

AI-powered app DoNotPay is facing accusations that it is “disguising itself as a licensed practitioner” in a class action lawsuit filed by US law firm Edelson.

However, DoNotPay’s founder Joshua Browder says the claims have “no merit whatsoever.”