This AI can take your bad sketch and make it art, right on your phone

Local and mobile AI image generation could be the wave of the future.

Most of us have by now dabbled a bit in generative image creation. We enter a weird little prompt into a text field, and an online platform like Stable Diffusion, Midjourney, or DALL-E spits out something beautiful, bizarre, or both. One thing all those platforms have in common, though, is the need for an online connection. What if there were a way to do similar (maybe better) generative AI image creation with just the phone in your hand and no connection to the internet or the cloud at all? Qualcomm thinks it has a solution in the not-at-all-scarily named ControlNet.

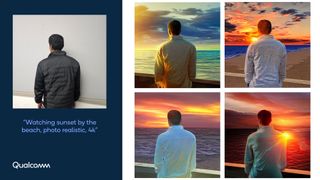

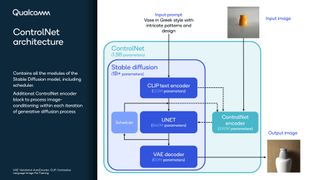

Unveiled this week at the Computer Vision and Pattern Recognition Conference (CVPR) in Vancouver, Canada, ControlNet is a new mobile AI image generation model with two tantalizing core benefits: 1) The model runs locally, so ControlNet can work on almost any platform without an online connection. 2) Instead of generating an AI image from text alone, ControlNet starts with a seed image you provide and then manipulates it based on a text prompt.

In some ways, this is similar to Adobe's Firefly AI, which can generate AI content to enhance portions of existing images. However, like the platforms above, that model needs an online connection to work.

Qualcomm's introduction of this open-source model, which is based in part on Stable Diffusion but adds an extra half a billion parameters to that model's existing 1 billion, and which third-party companies can use freely, is not pure altruism.

Sure, ControlNet could conceivably run on Windows, Mac, iOS, and Android, but it won't be nearly as fast unless it's running on Qualcomm's Snapdragon platform and, in particular, on the Hexagon digital signal processor (DSP) in the Snapdragon 8 Gen 2 mobile processor, like the one in the Samsung Galaxy S23 Ultra.

In demos I saw, ControlNet transformed a dull office space into a 1970s-themed room complete with orange walls, and then turned Barcelona's streets into flowing canals. The office image was stunning for its fidelity. The Barcelona one looked like the work of a fevered Van Gogh.

ControlNet does its work by taking the basic shapes and structures it finds in an image and drawing around them. Still, the speed and quality of the output mean that third-party hardware and software developers will surely take an interest, especially given the obvious benefits of local computing (something Apple famously already favors for much of its AI work).

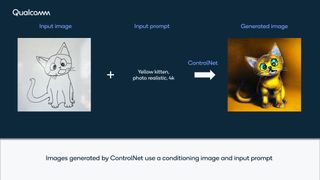

ControlNet doesn't necessarily need fully formed photos to generate new or altered images. Even a rough sketch and a text prompt can produce something interesting and maybe useful. In one Qualcomm-supplied demo image, a rough sketch of a kitten is turned into a surrealist cat that still, somehow, resembles the original drawing.

With local AI generation, your original seed image is not piped back up to the cloud, nor is the prompt shared with any third parties or stored on far-flung servers. It is, as most privacy advocates would prefer, a closed loop.

Qualcomm is rolling out ControlNet SDKs to developers who want to start programming and testing on Hexagon. As for who might unveil ControlNet-based products in the future, that's hard to say. It won't be Qualcomm, which does not sell anything directly to consumers.

Longtime partner Samsung, though, is a real possibility. Imagine the Samsung Galaxy S24 or S25 Ultra with a native ControlNet-based app. Or, perhaps, Samsung builds it right into its photo or camera app. For what it’s worth, the demo I saw was running on a Samsung Galaxy S23 Ultra.