‘Terrifying’ new Sora AI videos reveal how ANYONE will be able to create astonishingly realistic footage using text commands – amid fears technology threatens to wipe out industries like Hollywood and poses a grave threat to democracy

OpenAI, the company behind ChatGPT, has unveiled a ‘scary’ new tool, Sora, that can produce hyper-realistic videos from text, prompting warnings from experts.

OpenAI unveiled Sora on Thursday with examples such as drone footage of Tokyo in the snow, waves crashing against the cliffs of Big Sur and a grandmother enjoying a birthday party.

Experts have warned that the new artificial intelligence tool could wipe out entire industries such as film production and lead to a surge in deepfake videos in the run-up to the crucial US presidential election.

“Generative AI tools are evolving so quickly and we have social networks, which is creating an Achilles heel in our democracy and it couldn’t have happened at a worse time,” Oren Etzioni, founder of TrueMedia.org, told CBS.

“As we try to resolve this, we are faced with one of the most consequential elections in history,” he added.

OpenAI’s Sora tool created this video of golden retriever puppies playing in the snow

The tool was prompted with “drone view of waves crashing against the rugged cliffs along Big Sur’s Garay Point Beach” to create this hyper-realistic video

Another AI-generated video of Tokyo in the snow has shocked experts with its realism

The quality of AI-generated images, audio and video has increased rapidly over the past year, with companies like OpenAI, Google, Meta and Stable Diffusion maker Stability AI racing to create more advanced and accessible tools.

“Sora can generate complex scenes with multiple characters, specific types of movements, and precise details of the subject and background,” OpenAI explains on its website.

“The model understands not only what the user is asking for in the prompt, but also how those things exist in the physical world.”

The tool is currently being tested and evaluated for potential safety risks, and no public release date has been announced.

The company has revealed examples that are unlikely to offend, but experts warn the new technology could unleash a new wave of extremely lifelike deepfakes.

“We’re trying to build this plane as we fly it, and it will land in November if not sooner, and we don’t have the Federal Aviation Administration, we don’t have the history and we don’t have the resources to do this,” Etzioni warned.

Sora “will make it even easier for malicious actors to generate high-quality video deepfakes, and give them more flexibility to create videos that can be used for offensive purposes,” Dr. Andrew Newell, chief scientific officer of identity verification company iProov, told CBS.

“Voice actors or people who create short videos for video games, educational purposes or advertising will be most directly affected,” Newell warned.

Deepfake videos, including those of a sexual nature, are a growing problem for private individuals and public figures alike.

Sora was tasked with ‘a drone camera circling a beautiful historic church built on a rocky outcrop along the Amalfi Coast’ to create this video

The tool’s interpretation of: ‘A Chinese New Year celebration video with Chinese dragon’

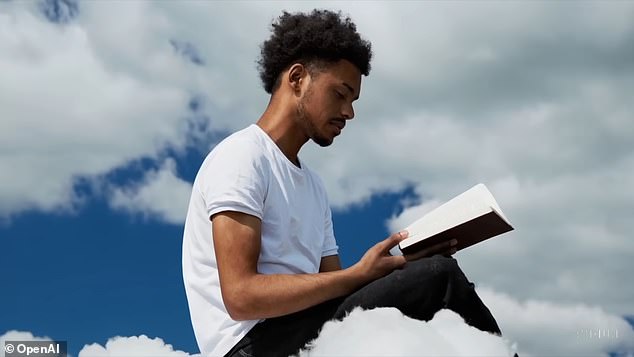

‘A young man in his twenties sits on a cloud in the sky reading a book’

‘Look where we have come in a year of image generation. Where will we be in a year?’ Michael Gracey, a film director and visual effects expert, told the Washington Post.

“We will take several important safety steps before making Sora available in OpenAI products,” the company wrote.

“We are working with red teamers – domain experts in areas such as disinformation, hateful content and bias – who will test the model in an adversarial manner.”

The company added: “We’re also building tools to help detect misleading content, such as a detection classifier that can tell when a video was generated by Sora.”

Deepfake images drew renewed attention earlier this year when AI-generated sexual images of Taylor Swift circulated on social media.

The images originated on the website Celeb Jihad and depict Swift in a series of sexual acts while dressed in Kansas City Chiefs memorabilia in a stadium.

The star was left ‘furious’ and considering legal action.

On Friday, several major tech companies signed a pact to take “reasonable precautions” to prevent artificial intelligence tools from being used to disrupt democratic elections around the world.

Executives from Adobe, Amazon, Google, IBM, Meta, Microsoft, OpenAI and TikTok have pledged to take preventative measures.

“Everyone recognizes that no technology company, no government, no civil society organization is capable of addressing the advent of this technology and its potentially nefarious uses on its own,” said Nick Clegg, president of global affairs for Meta, at the signing.

It comes as Nvidia, which makes computer chips used in AI technology, has seen its value explode. At one point last week, Nvidia closed at $781.28 per share, giving it a market cap of $1.78 trillion.

That’s higher than Amazon’s market cap of $1.75 trillion.

It was the first time since 2002 that Nvidia was worth more than Amazon at the market close, according to CNBC.

The California-based company has seen its shares rise 246 percent in the past twelve months as demand for its AI server chips grows. Those chips can cost more than $20,000 each.