Robots could become much better learners thanks to a groundbreaking method developed by Dyson-backed researchers. By removing much of the traditional complexity of teaching robots how to perform tasks, the approach could let them learn in a more human-like way.

One of the biggest hurdles in teaching robots new skills is how to convert complex, high-dimensional data, such as images from onboard RGB cameras, into actions that achieve specific goals. Existing methods typically rely on 3D representations that require accurate depth information, or use hierarchical predictions that work with motion planners or discrete policies.

Researchers from Imperial College London and the Dyson Robot Learning Lab have unveiled a new approach that could tackle this problem. The “Render and Diffuse” (R&D) method aims to bridge the gap between high-dimensional observations and low-level robot actions, especially when data is scarce.

R&D, described in a paper published on the arXiv preprint server, tackles the problem by using virtual renders of a 3D model of the robot. By rendering low-level actions directly within the observation space (the camera image), the researchers were able to simplify the learning process.
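To make "rendering actions within the observation space" concrete, here is a minimal sketch of the general idea. R&D renders a full 3D model of the robot; this sketch swaps that for a handful of hypothetical gripper keypoints and projects them into the image with a standard pinhole camera model. The names `Intrinsics`, `GRIPPER_POINTS`, `render_action` and `rasterize`, the keypoint coordinates and the camera parameters are all illustrative assumptions, not the authors' code.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics: focal lengths and principal point, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float


# Hypothetical gripper keypoints in the gripper's own frame (metres):
# centre, two fingertips, and a point along the approach axis.
GRIPPER_POINTS = [(0.0, 0.0, 0.0), (0.02, 0.0, 0.0), (-0.02, 0.0, 0.0), (0.0, 0.0, 0.05)]


def to_camera_frame(point, rotation, translation):
    """Apply a candidate gripper pose (the 'action'): p_cam = R @ p + t."""
    return [sum(rotation[i][j] * point[j] for j in range(3)) + translation[i] for i in range(3)]


def project(point_cam, K):
    """Project a camera-frame 3D point to (u, v) pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (K.fx * x / z + K.cx, K.fy * y / z + K.cy)


def render_action(rotation, translation, K, points=GRIPPER_POINTS):
    """Pixel locations of the gripper keypoints for one candidate action."""
    return [project(to_camera_frame(p, rotation, translation), K) for p in points]


def rasterize(pixels, width, height):
    """Binary 'action image' marking rendered keypoints; could be stacked with RGB channels."""
    mask = [[0] * width for _ in range(height)]
    for u, v in pixels:
        col, row = round(u), round(v)
        if 0 <= col < width and 0 <= row < height:
            mask[row][col] = 1
    return mask


if __name__ == "__main__":
    K = Intrinsics(fx=100.0, fy=100.0, cx=32.0, cy=32.0)
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    pixels = render_action(identity, [0.0, 0.0, 0.5], K)  # gripper 0.5 m in front of the camera
    mask = rasterize(pixels, 64, 64)
    print(pixels, sum(map(sum, mask)))
```

Because the action now lives in the same pixel grid as the camera image, a single image-based network can reason about "where the gripper would be" and "what the scene looks like" together, which is the intuition the paper builds on.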

Visualizing actions within an image

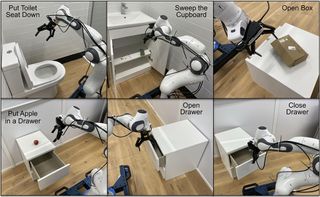

One of the tasks the researchers applied the technique to was having a robot do something that men supposedly find impossible (at least according to women): lowering a toilet seat. The challenge is to take a high-dimensional observation (an image showing that the seat is up) and map it to a low-level robot action (putting the seat down).

TechXplore explains: “Unlike most robotic systems, when learning new manual skills, humans do not perform extensive calculations to determine how much to move their limbs. Instead, they typically try to imagine how their hands should move to effectively tackle a specific task.”

Vitalis Vosylius, a final-year Ph.D. student at Imperial College London and lead author of the paper, said: “Our method, Render and Diffuse, enables robots to do something similar: ‘imagine’ their actions within the image using virtual renders of their own embodiment. Representing robot actions and observations together as RGB images allows us to teach robots different tasks with fewer demonstrations and with improved spatial generalization capabilities.”

A key part of R&D is its learned diffusion process, which iteratively refines the virtual renders, updating the robot’s configuration until its actions closely match the training data.
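The iterative refinement described above can be illustrated with a generic diffusion sampling loop. The sketch below uses a deterministic DDIM-style update (eta = 0) with a linear noise schedule; it is a textbook sampler, not the paper's exact parameterisation. The denoiser is a hypothetical stand-in that simply returns a fixed "demonstrated" action: in R&D a trained network would instead look at the camera image with the current action re-rendered into it at every step. The function names, schedule values and the 3D action vector are all assumptions for illustration.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear beta schedule.

    Index 0 is fixed to 1.0, meaning "no noise left".
    """
    alpha_bars = [1.0]
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        alpha_bars.append(alpha_bars[-1] * (1.0 - beta))
    return alpha_bars


def hypothetical_denoiser(noisy_action, t, target):
    # Stand-in for a trained network that predicts the clean action.
    # A real model would see the image plus the rendered noisy action;
    # here we return a fixed demonstrated action so the loop can run.
    return list(target)


def sample_action(target, num_steps=50, seed=0):
    rng = random.Random(seed)
    alpha_bars = make_alpha_bars(num_steps)
    # Start from pure Gaussian noise in action space (e.g. a gripper position).
    action = [rng.gauss(0.0, 1.0) for _ in target]
    trajectory = [list(action)]
    for t in range(num_steps, 0, -1):
        a_t, a_prev = alpha_bars[t], alpha_bars[t - 1]
        x0_hat = hypothetical_denoiser(action, t, target)
        # Noise implied by the current sample and the predicted clean action.
        eps_hat = [(x - math.sqrt(a_t) * x0) / math.sqrt(1.0 - a_t)
                   for x, x0 in zip(action, x0_hat)]
        # Deterministic DDIM update: move to the next, less noisy level.
        action = [math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * e
                  for x0, e in zip(x0_hat, eps_hat)]
        trajectory.append(list(action))
    return action, trajectory


if __name__ == "__main__":
    final, traj = sample_action([0.1, -0.2, 0.35])
    print(len(traj), final)
```

With a perfect denoiser the final step lands exactly on the predicted clean action, since the last noise level has `alpha_bar = 1`. With a learned denoiser, each intermediate action would be rendered back into the image before the next prediction, which is where the "render" and "diffuse" halves of the method's name meet.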

The researchers conducted extensive evaluations, testing different R&D variants in simulated environments and on six real-world tasks, including removing a lid from a pan, placing a phone on a charging station, opening a box and sliding a block to a goal. The results were promising, and as research continues, the approach could become a cornerstone in the development of smarter, more adaptable robots for everyday tasks.

“The ability to represent robot actions in images opens up exciting possibilities for future research,” Vosylius said. “I’m especially excited about combining this approach with powerful image foundation models trained on massive Internet data. This could allow robots to leverage the general knowledge captured by these models, while still being able to reason about low-level robot actions.”