New York lawyer used ChatGPT to research cases and cited a fictitious decision in an appeal of her client’s lawsuit claiming a Queens doctor botched an abortion

- New York attorney Jae Lee cited a fabricated case about a Queens doctor who bungled an abortion in an appeal to revive her client’s lawsuit

- Lee said the case was “suggested” by ChatGPT, but there was “no bad faith, intent or prejudice against the opposing party or the legal system.”

- She has now been referred to the grievance panel of the 2nd U.S. Circuit Court of Appeals

A New York attorney is facing disciplinary proceedings after being caught using ChatGPT for research and citing a non-existent case in a medical malpractice lawsuit.

Attorney Jae Lee was referred to the 2nd U.S. Circuit Court of Appeals’ grievance panel on Tuesday after citing a fabricated case about a Queens doctor who bungled an abortion in an appeal to revive her client’s lawsuit.

The cited case did not exist and had been generated by OpenAI’s ChatGPT, and the lawsuit was dismissed.

The court ordered Lee to submit a copy of the cited decision because it could not find the case.

She replied that she ‘could not provide a copy of the decision’.


Lee said she included a case “suggested” by ChatGPT, but that there was “no evidence of bad faith, deliberateness or bias against the opposing party or the legal system.”

The conduct “falls well below the basic obligations of counsel,” a three-judge panel of the Manhattan-based appeals court wrote.

Lee, an attorney at the three-lawyer firm JSL Law Offices in New York, said the court’s disciplinary referral came as a surprise and that she is “committed to adhering to the highest professional standards and to addressing this matter with the seriousness it deserves.”

The 2nd Circuit has now referred Lee to the court’s grievance panel for “further investigation.”

The court also ordered the lawyer to provide a copy of the ruling to her client.

This isn’t the first instance of generative AI “hallucinating” court cases, in which the tool produces information that is convincing but incorrect.

In June, two New York attorneys were fined $5,000 after relying on bogus research from ChatGPT for a filing in a personal injury claim against airline Avianca.

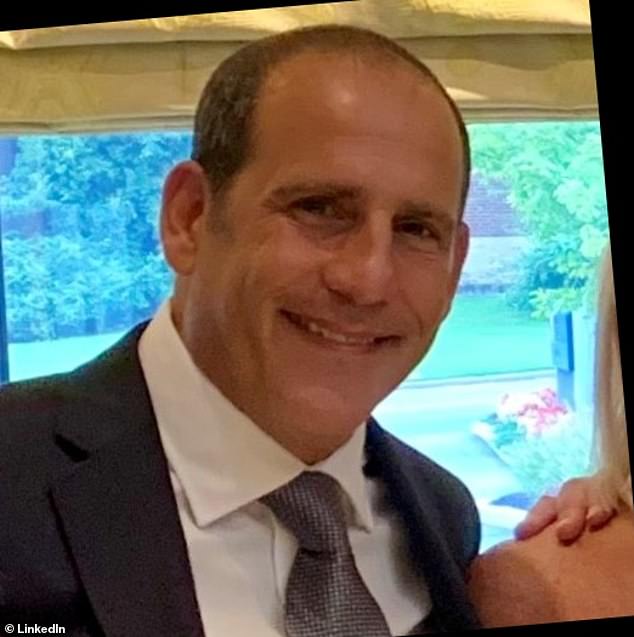

Judge Kevin Castel said attorneys Steven Schwartz and Peter LoDuca acted in bad faith by using the AI bot’s submissions — some of which contained “gibberish” — even after court orders questioned their authenticity.

Steven Schwartz submitted a 10-page letter containing six relevant court decisions that were found to have been fabricated by ChatGPT

Colombian airline Avianca had asked the judge to dismiss the claim against it because the statute of limitations had expired, and that is when the plaintiff’s lawyers responded with what they believed were relevant cases supplied by ChatGPT.

Judge Kevin Castel said there is nothing wrong with using ChatGPT to argue a case, but attorneys are still responsible for ensuring the accuracy of their submissions

Schwartz and LoDuca represented Roberto Mata, who claimed his knee was injured when he was struck by a metal serving cart on an Avianca flight from El Salvador to New York’s Kennedy International Airport in 2019.

When the Colombian airline asked a Manhattan judge to dismiss the case because the statute of limitations had expired, Schwartz filed a 10-page legal brief citing half a dozen supposedly relevant court rulings.

But six cases cited in the filing — including Martinez v. Delta Air Lines, Zicherman v. Korean Air Lines and Varghese v. China Southern Airlines — did not exist.

The judge concluded that Schwartz and LoDuca were responsible for ensuring the “accuracy” of all submissions, even if created with the help of artificial intelligence.

“Technological advances are commonplace and there is nothing inherently inappropriate about using a reliable artificial intelligence tool,” Castel wrote.

“But existing rules impose a gatekeeping role on attorneys to ensure the accuracy of their filings,” he explained.