Microsoft CEO Satya Nadella says rise of AI deepfake porn that targeted Taylor Swift is ‘alarming and terrible’

Microsoft’s CEO has called the sexually explicit AI images of Taylor Swift that circulated this week “horrible” and “alarming,” and said more must be done to unite “justice and law enforcement and technology platforms.”

Satya Nadella will appear on NBC Nightly News with Lester Holt on Tuesday. According to excerpts from the interview released by the network, he said the images, believed to have been created using Microsoft tools, are disturbing.

According to the excerpts, he did not promise any concrete change in Microsoft policy.

Nadella said Microsoft and other tech companies have a responsibility to “install all the guardrails that we need to put around the technology so that safer content is produced.”

Microsoft CEO Satya Nadella spoke with NBC News anchor Lester Holt. The interview will be broadcast on Tuesday.

And he said there needs to be more agreement about what is acceptable, and more cooperation.

“We can do that, especially when you have law and law enforcement and technology platforms that can come together. I think we can govern a lot more than we think we give ourselves credit for.”

Pressed on what Microsoft planned to do, Nadella dodged the question.

“I think, first of all, this is absolutely alarming and terrible, and that’s why we need to take action,” he said.

“And honestly, all of us on the technology platform, regardless of what your position is on a particular issue, I think we all benefit from the online world being a safe world.

“And so I don’t think anyone wants an online world that is completely unsafe, both for content creators and for content consumers.

“So that’s why I think it behooves us to get started on this quickly.”

The full interview will be broadcast on Tuesday evening.

Swift, 34, is said to be deeply disturbed by the images, and members of Congress have renewed their calls to criminalize the sharing of pornographic, non-consensual deepfakes.

The images were first noticed on Wednesday and spread quickly. They were viewed 45 million times and reposted 24,000 times on X before being deleted 19 hours later, The Verge reported.

On Thursday, tech news website 404 Media reported that the images came from a Telegram group dedicated to creating non-consensual AI-generated sexual images of women.

AI-generated explicit images of Taylor Swift were posted on the Celeb Jihad website, which was previously warned by the singer’s lawyers after it shared another doctored image in 2011. Pictured: Swift performing at the Eras Tour in Sao Paulo, Brazil, on November 24, 2023.

The lewd images are themed around Swift’s fandom of the Kansas City Chiefs, which began after she started dating star player Travis Kelce.

The images were generated by members of a Telegram chat group, who used Microsoft tools and shared workarounds to get around Microsoft’s rules.

Members of the group were annoyed by the attention the Swift images drew to their work, 404 Media reported.

“I don’t know whether to feel flattered or angry that some of these stolen photos on Twitter are mine,” said one user in the Telegram group, according to the site.

Another complained: “Which one of you mfs here takes shit and throws it on Twitter?”

A third replied: “If there’s any way to shut down and rob this motherfucker they’re such idiots.”

The images were not classic “deepfakes,” in which Swift’s face would be superimposed on someone else’s body, 404 Media reported.

Instead, they were created entirely by AI, with members of the group recommending Microsoft’s AI image generator, Designer.

Microsoft does not allow users to generate an image of a real person by entering a prompt such as “Taylor Swift.”

But users in the Telegram group suggested workarounds, prompting Designer to create images of “Taylor ‘singer’ Swift.”


And instead of instructing the program to create sexual poses, users entered prompts with enough objects, colors and compositions to create the desired effect.

The Pennsylvania-born billionaire was one of the first people to fall victim to deepfake porn, in 2017.

404 Media reported that she was also one of the first targets of DeepNude, which generated nude images from a single photo. The app has since been taken down.

Swift’s ordeal has renewed the push among politicians for stricter laws.

Joe Morelle, a Democratic member of the House of Representatives representing New York, introduced a bill in May 2023 that would criminalize non-consensual sexually explicit deepfakes at the federal level.

On Thursday, he wrote on X: “Another example of the destruction deepfakes cause.”

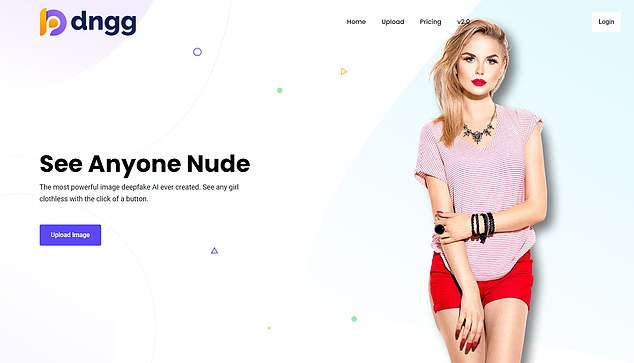

A website called ‘DeepNude’ claims that with the click of a button you can “see any girl without clothes (sic).” A ‘standard’ package on ‘DeepNude’ lets you generate 100 images per month for $29.95, while $99 buys a ‘premium’ package of 420.

His fellow New Yorker, Rep. Yvette Clarke, also called for action.

“What happened to Taylor Swift is nothing new. Women have been targeted by deepfakes without their consent for years. And advances in AI have made creating deepfakes easier and cheaper,” she said.

“This is an issue that both sides of the aisle, and even Swifties, should be able to come together to solve.”

Swift has not commented on the incident, and neither has X owner Elon Musk.

As of April 2023, the platform’s manipulated media policy bans media “that depicts a real person (who) has been fabricated or simulated, especially through the use of artificial intelligence algorithms.”

However, such content has often slipped through the net, and the situation worsened after Musk took over in October 2022 and gutted the moderation team.