I Was Sexually Assaulted Online at 12 – Here’s What I Experienced and What Parents and Kids Need to Know

A victim of online grooming warns parents and children about the dangers of the Internet.

Harrison Haynes was 12 years old when he befriended a ‘teenager’ while playing video games online, a contact who went on to expose him to pornographic content, self-harm videos and manipulative messages.

The “friendship” led Harrison, now 20, down a dark path of isolation, secrecy and shame for more than a year.

Now Haynes is telling his story to show that the danger to children today is not a stranger in a white van handing out candy, but something that “comes from our iPhones.”

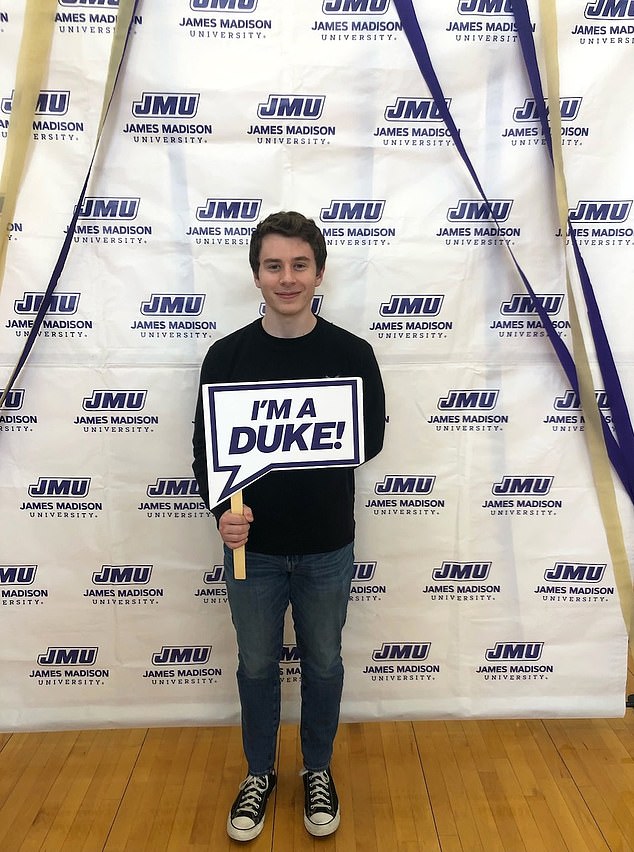

Above is Harrison Haynes as a 12-year-old. At the time, Haynes thought he had found a “best friend” when he began chatting with a stranger he met while playing video games online. But the stranger soon began exposing him to pornography and videos of self-harm.

Above, Haynes attends a protest at Apple headquarters in Cupertino, California. Haynes, a 20-year-old college student, has come forward with his story in an effort to pressure Apple to do more to build stronger child protection features into its products.

“I think almost every generation in America right now is being told that there’s a stranger in a white van giving you candy, and you have to say no to that stranger,” Haynes told ABC’s Good Morning America on Tuesday.

“But I think for us and for my generation, the danger is not the stranger in the white van. That’s not where the call comes from. The call comes from our pockets. It comes from our iPhones.”

Haynes’ story begins when he was a child who struggled to make friends and found that gaming helped ease his loneliness.

Through gaming, Haynes came into contact with the ‘19-year-old’ who, he said, would go on to sexually abuse him.

“I don’t think anyone in the world has mastered the language of grooming yet,” Haynes said of his ordeal.

The relationship started on a gaming platform, but once the “teen” had a hold on Haynes, their conversations moved to iMessage.

“When we switched to iMessage, there was no way to report him,” Haynes said of the encounter. “For him, he was safe on iMessage.”

Through Apple’s messaging service, the stranger was able to send Haynes content depicting self-harm and pornography.

The stranger also began bombarding the boy with messages at school and while he was with his family.

Haynes explained that over time he felt “trapped against a wall” as what started as a few innocent messages a week spiraled into abusive and inappropriate messages four to five times a day, which he couldn’t block on his iPhone.

He told GMA that his ordeal reached a breaking point when his online abuser threatened to kill himself if Haynes did not keep complying with his demands.

In response to his abuser’s manipulative suicide threat, Haynes said he “cried so hard I woke up my parents down the hall.”

His parents rushed in and searched through his phone, learning for the first time about the abusive relationship their son had been drawn into with this online stranger. Haynes said he was surprised to find that his fears of shame and punishment were unfounded.

“They didn’t seem angry with me like I thought they would be,” Haynes recalled. “They sat me down and told me I was being manipulated in some way.”

“When (…) he started exposing me to photos and videos of self-harm and internet porn,” he added, “I thought I couldn’t have any more contact with an adult.”

Today, Haynes (above) is a student and child safety activist at James Madison University.

Haynes, now a student at James Madison University, has joined the nonprofit Heat Initiative.

The group is described as “a collaborative effort of concerned child safety experts and advocates encouraging leading technology companies to detect and eliminate child sexual abuse images and videos on their platforms.”

In June, Haynes joined the group’s protest outside Apple’s headquarters in Cupertino, California.

He and Heat Initiative are calling on the tech giant to develop and introduce features that would allow parents, children and other stakeholders to “report inappropriate images and harmful situations.”

The group also wants Apple to make it harder for sex offenders to store and distribute known images and videos of child sexual abuse on Apple’s iCloud platform.

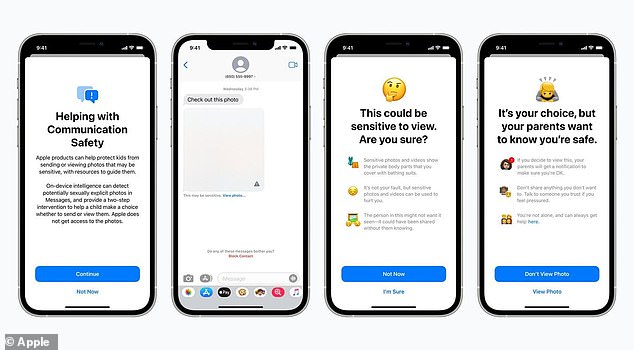

Apple pointed reporters to the child safety features the company has added to its iOS operating system and its apps over the past eight years.

The company told Good Morning America that Apple devices have offered additional communication safety features for minors since the release of iOS 15 in 2021.

One of those features is a warning when underage users try to send or receive images or videos containing nudity.

Above is another photo of Haynes as a 12-year-old, around the time of the online abuse.

According to the company, this feature became the default setting for child accounts under 13 with the release of iOS 17 in September 2023.

Apple’s efforts to detect and report child sexual abuse material stored on its iCloud servers have proven more difficult and controversial, with privacy advocates concerned about a large company scanning all of its users’ data.

But Haynes also has a message for parents and children, who he says need to be more careful about how they navigate digital spaces.

“Parents, I can’t stress this enough,” Haynes said, “don’t be afraid to talk to your kids about uncomfortable things.”

The child protection activist said he had felt trapped because he relied on his abuser as a lifeline: someone to talk to about his feelings, to help him cope with bullying at school and to discuss the typical challenges and questions of adolescence.

“There was this weird back and forth,” Haynes explained. “In my head I was like, I want to come out of this on my own, because now I’m hurting myself and now I’m consuming porn like a 12, 13-year-old boy.”

At the same time, the false trust his abuser had built with him carried a deep emotional burden.

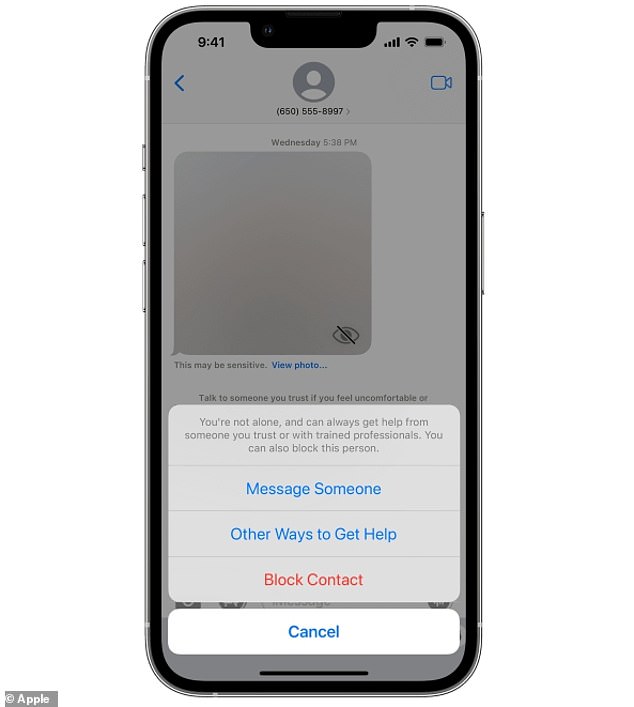

Apple launched its Communication Safety tool in the US in 2022. The tool, which parents can opt into or out of, scans images that children send and receive in Messages for nudity and automatically blurs any that contain it.

If the Messages app detects that a child has received or is trying to send a photo containing nudity, it blurs the photo, displays a warning that the photo may be sensitive and offers ways to get help.

“He was someone I really cared about, and I knew if I asked for help I would be putting him in danger,” he said.

Another part of the problem, Haynes noted, was “the taboo and stigmatization” surrounding the kind of shocking images and videos he was receiving on his iPhone.

“I didn’t feel like I could reach out to a principal, a counselor, a teacher, or my parents because I was afraid I would get into trouble,” Haynes explained.

“If parents can interact with their children in a place where they feel comfortable, which is in their own home,” the 20-year-old now believes, “I think we can have a much better future.”

“If I had had that conversation with my parents, I wouldn’t have had to seek comfort from a stranger on the internet.”