Heart-stopping moment Tesla owner almost crashes into a moving TRAIN in ‘self-drive’ mode (and he says it wasn’t the first time!)

A Tesla owner blames his vehicle’s Full Self-Driving feature for steering toward an oncoming train before he could intervene.

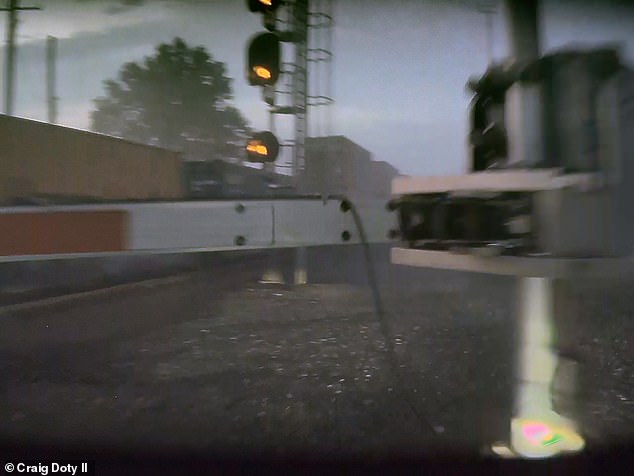

Craig Doty II, of Ohio, was driving along a road at night earlier this month when dashcam footage showed his Tesla quickly approaching a passing train without any sign of slowing down.

He claimed his vehicle was in Full Self-Driving (FSD) mode at the time and did not slow down despite the train crossing the road – but did not provide the make or model of the car.

In the video, it appears that the driver was forced to intervene by driving straight through the level crossing sign and coming to a stop just meters from the moving train.

Tesla has faced numerous lawsuits from owners claiming that the FSD or Autopilot feature caused them to crash because the car failed to stop for another vehicle or struck an object, in some cases claiming the lives of the drivers involved.

The Tesla driver claimed his vehicle was in Full Self-Driving (FSD) mode but did not slow down as he approached a train crossing the road. In the video, the driver was reportedly forced to veer off the road to avoid a collision with the train. Pictured: The Tesla vehicle after the near collision

According to the National Highway Traffic Safety Administration (NHTSA), as of April 2024, Tesla’s Models Y,

Doty reported the issue on the Tesla Motors Club website, saying he has owned the car for less than a year, but that twice in the past six months it has attempted to drive directly into a passing train while in FSD mode.

Doty said he had tried to report the incident and to find cases similar to his, but could not find an attorney who would take his case because he suffered no significant injuries, only back pain and a bruise.

DailyMail.com has reached out to Tesla and Doty for comment.

Tesla warns drivers against using the FSD system in low light or poor weather conditions such as rain, snow, direct sunlight and fog, which “can significantly degrade performance.”

This is because such conditions disrupt the operation of Tesla’s sensors, including ultrasonic sensors that bounce high-frequency sound waves off nearby objects.

The system also uses radar, which emits radio waves to determine if a car is nearby, and 360-degree cameras.

Together, these systems gather data about the environment, including road conditions, traffic and nearby objects, but in low-visibility conditions they are unable to accurately detect their surroundings.

Craig Doty II reported that he had owned his Tesla vehicle for less than a year, but the Full Self-Driving feature had already nearly caused two accidents. Pictured: The Tesla approaches the train but does not slow down or stop

Tesla is facing numerous lawsuits from owners who claim the FSD or Autopilot feature caused them to crash. Pictured: Craig Doty II’s car veers off the road to avoid the train

When asked why he continued to use the FSD system after the first near-collision, Doty said he was confident it would work correctly because he had not had any other problems for a while.

“After you’ve used the FSD system for a while, you tend to trust that it’s working correctly, just as you would with adaptive cruise control,” he said.

“You assume the vehicle will slow down as you approach a slower car in front of you, until it doesn’t, and you’re suddenly forced to take control.

“This complacency can build up over time as the system usually performs as expected, making these types of incidents particularly concerning.”

The Tesla manual does warn drivers against relying solely on the FSD feature, telling them to keep their hands on the wheel at all times, “consider road conditions and surrounding traffic, look out for pedestrians and cyclists, and always be prepared to take immediate action.”

Tesla’s Autopilot system is accused of causing a fiery crash that killed a Colorado man as he drove home from the golf course, according to a lawsuit filed May 3.

Hans Von Ohain’s family claimed he was using the Autopilot system of his 2021 Tesla Model 3 on May 16, 2022, when it veered sharply to the right of the road, but Erik Rossiter, who was a passenger, said the driver was heavily intoxicated at the time of the crash.

Von Ohain attempted to regain control of the vehicle but was unable to do so and died when the car crashed into a tree and burst into flames.

An autopsy report later found he had three times the legal alcohol limit in his system when he died.

In 2019, a Florida man was also killed when his Tesla Model 3’s Autopilot failed to brake as a semi-truck entered the road, causing the car to slide under the trailer and killing him instantly.

In October last year, Tesla won its first lawsuit over allegations that its Autopilot feature led to the death of a Los Angeles man when the Model 3 veered off the highway and crashed into a palm tree before catching fire.

The NHTSA investigated the accidents linked to the Autopilot feature and said a “weak driver engagement system” contributed to the car wrecks.

The Autopilot feature “led to foreseeable misuse and avoidable crashes,” the NHTSA report said, adding that the system “did not sufficiently ensure driver attention and proper use.”

Tesla released an over-the-air software update to two million vehicles in the US in December that was supposed to improve the vehicles’ Autopilot and FSD systems, but the NHTSA has now suggested the update was probably not enough in light of more crashes.

Elon Musk did not comment on the NHTSA’s report, but previously praised the effectiveness of Tesla’s self-driving systems and claimed in 2021 after on

“People are dying because of misplaced confidence in the capabilities of Tesla Autopilot. Even simple steps can improve safety,” Philip Koopman, an automotive safety researcher and professor of computer engineering at Carnegie Mellon University, told CNBC.

“Tesla could automatically limit Autopilot use to intended roads based on map data already in the vehicle,” he continued.

“Tesla could improve monitoring so drivers aren’t routinely immersed in their cell phones while Autopilot is in use.”