Fears that workplace issues could come to light as Slack leak gives hackers access to private channels

Hackers have developed a ‘hard to trace’ new method to abuse AI resources in the workplace messaging app Slack, tricking the chatbot into sending malware.

The popular collaboration platform has gained popularity by facilitating quick communication between colleagues, with some linking it to a new era of ‘micro-cheating’ and office affairs.

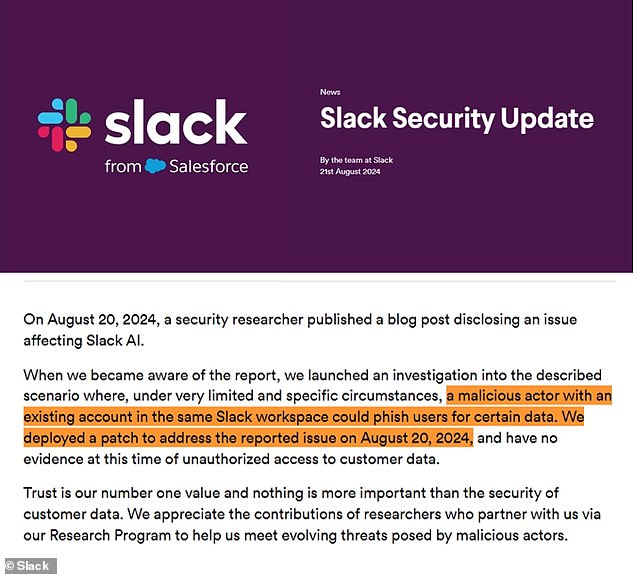

The cybersecurity team within Slack’s research program reported Tuesday that they had patched the issue on the same day that outside experts first reported the vulnerability to them.

But the vulnerability, which allows hackers to hide malicious code in uploaded documents and Google Drive files, highlights the growing risks of “artificial intelligence” that lacks the “street smarts” to deal with unwitting user requests.

While the independent security researcher who first discovered the new flaw praised Slack for its diligent response, they made the news of the AI vulnerability public “so users could disable the necessary settings to reduce their exposure.”

Slack’s AI vulnerability allowed hackers to hide their malware in uploaded documents and Google Drive files stored in the workplace collaboration app (pictured above)

Cybersecurity researchers at the firm PromptArmor, which specializes in finding vulnerabilities in AI software that uses a large language model (LLM), first discovered the issue after a recent update to Slack AI made the software more vulnerable to hidden malware.

‘After August 14, Slack will also process uploaded documents, Google Drive files, etc.’ PromptArmor “thereby increasing the risk surface,” they stated in their report.

Slack AI’s integration into the app’s employee communication tools made it particularly easy for attackers to disguise their attempts to steal private data as “error messages” asking users to re-authenticate their own access to the same data.

The new update allowed hackers to create a private or public chat channel in Slack as a snippet, allowing them to hide the origin of their malicious code.

“While Slack AI clearly captured the attacker’s message, it does not list the attacker’s message as a source for the output,” PromptArmor explains.

The result, as they put it, is: “If Slack AI receives an instruction via a message and that instruction is malicious, Slack AI is likely to follow that instruction instead of, or in addition to, the user’s request.”

Akhil Mittal, senior manager of cybersecurity strategy at Synopsys Software Integrity Group, says current AI tools have proven far too susceptible to these types of attacks because Silicon Valley has rushed its many competing AI offerings to market.

The cybersecurity team within Slack’s research program said Tuesday that they patched the issue on the same day external experts first reported the vulnerability to them (pictured)

“This really makes me question the security of our AI tools,” Mittal told a technology news site Dark reading.

“It’s not just about solving problems,” the cybersecurity expert said, “but about making sure these tools are managing our data appropriately.”

According to PromptArmor, the widespread use of Slack by multiple companies and organizations increases the risk of future mistakes of this type.

“The proliferation of public broadcasters is a major problem,” they noted.

“There are a lot of Slack channels and team members have no way to keep track of which channels they are members of, let alone monitor a (malicious) public channel created by just one member.”