CheatGPT! Examiners struggle to tell the difference between answers written by AI and those written by real human students. Can you tell which of these papers was written by a bot?

The art of cheating on exams has come a long way since the days of scribbling a few notes on your wrist.

In fact, a new study shows that AI chatbots are making cheating more efficient than ever.

Even experienced examiners now struggle to tell the difference between answers written by AI and those written by real students, researchers have found.

Experts at the University of Reading secretly added answers generated entirely by ChatGPT to a real psychology exam.

And even though the researchers used AI in the simplest and most obvious way, unsuspecting markers failed to detect the AI responses 94 percent of the time.

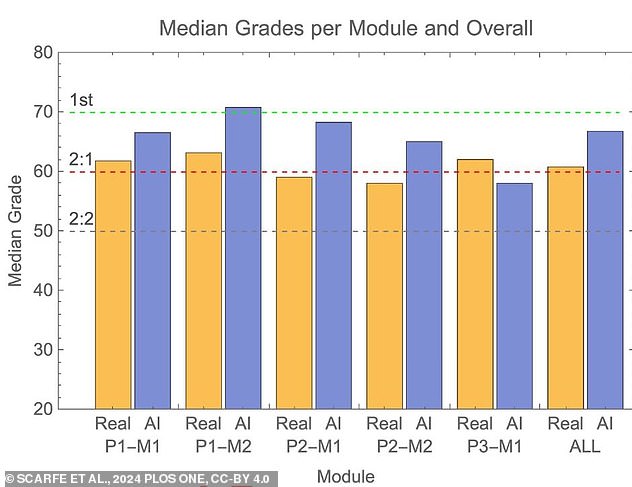

Even more disturbing, the AI outperformed human students on average, achieving high 2:1 and first-class grades.

Researchers have found that even experienced examiners fail to tell the difference between real human responses and AI on actual exams (stock image)

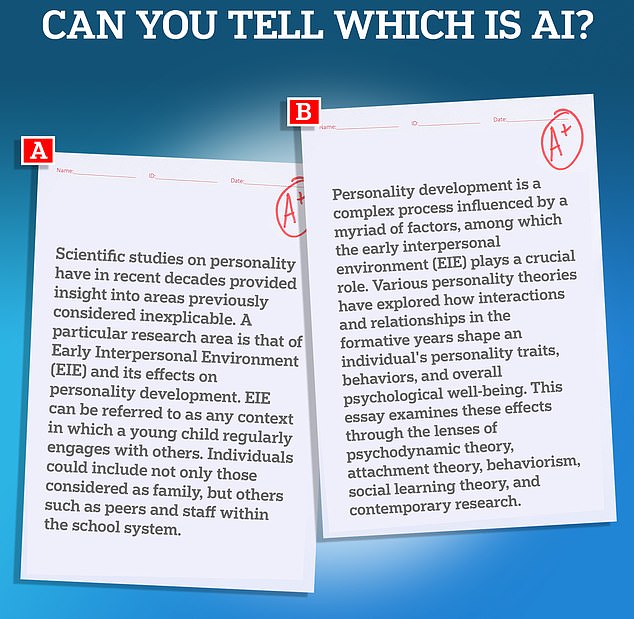

One of these essay examples was written by a real human, while the other was generated by MailOnline using ChatGPT and the researchers’ methodology. Can you tell which is which? (Answers below)

The rapid advancement of text-generating AIs such as ChatGPT has created a serious risk that AI-powered cheating could undermine the exam process.

To see how bad this problem could be, Associate Professor Peter Scarfe and Professor Etienne Roesch tried to ‘infiltrate’ a real exam with AI.

The researchers created 33 fake student profiles and registered them to sit online, at-home exams in several undergraduate psychology modules.

Using ChatGPT-4, the researchers generated completely artificial answers to both 200-word short-answer questions and full 1,500-word essays.

These answers were then submitted through the School of Psychology and Clinical Language Sciences’ examination system, alongside the answers of real students.

To show how difficult it can be to tell the difference, MailOnline has generated its own sample essays.

For data privacy reasons, the researchers couldn’t share real exam answers with us, so we used their exact AI prompt in ChatGPT to answer an undergraduate psychology question.

One of the examples shown was generated by AI, while the other is a human example taken from an essay writing guide from the University of South Australia.

So, can you tell which is which? The answers are in a fact box below.

Researchers secretly added AI-generated answers from 33 fake students to a real psychology exam to see whether any of them would be detected (stock image)

None of the markers were aware that an experiment was taking place and there was nothing to indicate that the AI papers were any different.

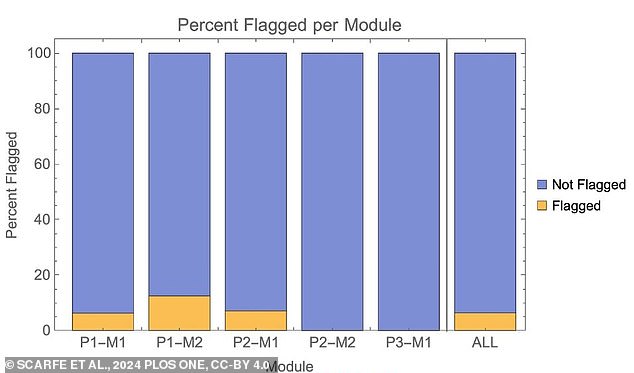

Of the 63 AI-generated papers submitted, only 6 percent were flagged as potentially suspect by examiners, while the remaining 94 percent went completely unnoticed.

The AI papers achieved higher average grades than the real students, and in some modules they outperformed their human classmates outright.

In 83 percent of the cases, the AI achieved grades that were better than those of a randomly selected group of students.

This means that only 16 percent of students would have gotten a better grade if they had studied and taken the exam themselves, instead of using AI.

Of the 63 AI-generated papers submitted, 94 percent went completely unnoticed by the human examiners

Using ChatGPT-4, the researchers simply asked the AI to respond to the essay question and submitted the raw text. This is the easiest and most obvious way any student can use AI (stock image)

In fact, as the researchers point out, there’s a good chance that some real students did use AI to cheat their way through this online exam.

Since the pandemic, many universities have switched from traditional exams to an online take-home exam model.

Lead researcher Dr. Scarfe said: ‘Many institutions have moved away from traditional exams to make assessment more inclusive.’

The advantage is that these exams generally test more than just the ability to cram information and are more accessible to people with mental or physical health conditions.

However, this move coincided with the rise of ‘generative’ AI, which allows users to produce large amounts of text from just a simple prompt.

Because students work at home, away from the watchful eye of an invigilator, it is much easier for them to cheat using AI.

And while AI detectors exist, they have proven extremely unreliable in real-world situations.

For example, an AI detector created by Turnitin, a plagiarism-checking service widely used by universities, was found to be less than 20 percent accurate when used on real students.

Even with a very simple use of ChatGPT, the AI papers (blue) outperformed their human counterparts (orange) in almost every module. In one module, P1-M2, the AI did better by an entire degree classification boundary.

The researchers say this could mean the end of traditional exams as we know them, as universities are forced to adapt.

Dr. Scarfe says: ‘We won’t necessarily go back completely to handwritten exams, but the global education sector will need to evolve in light of AI.’

In their paper, the researchers suggest that exams may even need to permit the use of AI to avoid becoming outdated.

Because AI is nearly impossible to detect and using it seems likely to become a necessary skill, the researchers argue that exams should not try to fight this new technology, just as calculators eventually became accepted in exams.

The researchers write: ‘A “new normal” that integrates AI seems inevitable. An “authentic form of assessment” will be one that uses AI.’

Professor Elizabeth McCrum, of the University of Reading, added: ‘Solutions include moving away from outdated assessment ideas and towards ideas that better match the skills students need in the workplace, including the use of AI.’