British Bulldog! Boston Dynamics’ creepy robot dog can now talk in an English accent thanks to ChatGPT


As if walking a robotic dog wasn’t weird enough, the Boston Dynamics Spot can now take visitors for a walk, acting as an English tour guide.

In a new video, the engineering company demonstrated Spot’s new ability to answer questions and tell jokes in a range of accents and with several distinct personalities.

The robot, decorated with beanie hats and googly eyes, leads guests to different locations and describes what it sees.

Spot opens and closes its gripper to mimic a mouth and turns to “look” at people. The performance is impressively close to that of a real guide.

Spot is powered by ChatGPT, and its creators say they were surprised by some of the responses the robot dog gave.

Spot the robot dog now has the ability to play tour guide, using ChatGPT to answer questions and describe the world around it

The footage shows the $75,000 (£61,857) robot adopting the persona of a “fancy butler”, saying: “My language has been meticulously designed to provide an authentic British experience.”

When lead software engineer Matt Klingensmith asked the robot whether it enjoyed its job, it replied: “Oh, Mr. Matt, my work as a tour guide provides great satisfaction.”

“I find spreading knowledge rather rewarding, wouldn’t you agree?”

This is not the only character the robot dog plays: it also takes on the voices and mannerisms of a “precious metal cowgirl”, an “enthusiastic tour guide” and a “nature documentary”.

The robot also displays a remarkable ability to recognize and respond to objects in the world.

Assuming the role of a “1920s archaeologist,” Spot refers to the camera crew, calling them “a fellow explorer with a camera.”

One of the most surprising characters is “Josh,” a sarcastic and moody robot guide. Mr. Klingensmith describes the encounter as “an experience I have never had with a robot before in my entire life.”

When asked for a haiku poem about the room, Josh’s character responds: “The generator hums lowly in a joyless room, just like my soul, Matt.”

However, all these various functions and characters are the product of simple modifications to the same code.

Using different prompts, the large language model behind Spot can create a whole range of characters from “Fancy Butler” to “Josh.”

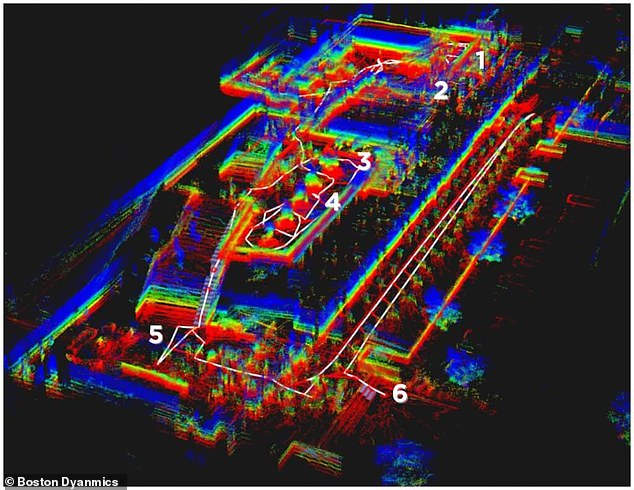

Boston Dynamics provides the AI with this office map, including some labeled locations and some brief descriptions

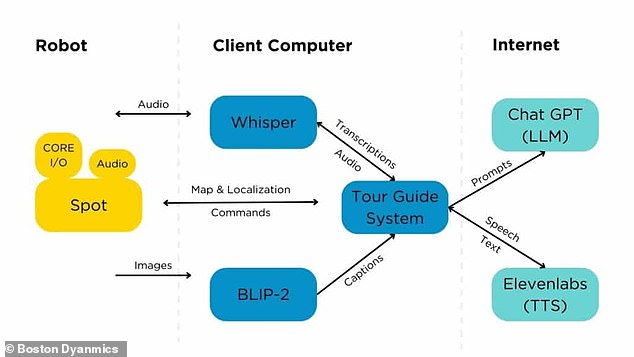

The team provided ChatGPT with a carefully designed prompt that converts visual and auditory information into speech and commands for the bot.

The prompts include instructions such as: “Use the tour guide API to guide guests through the building using a bot. Tell guests what you see, and compose interesting stories about it.”

They add context such as: “Character: ‘You’re a sarcastic, unhelpful robot.’”

The model is then provided with a map of the building, including some labeled rooms and brief descriptions.
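The prompt assembly described above might look something like the following minimal Python sketch. The function name `build_prompt`, the map contents and the layout of the final prompt are illustrative assumptions, not Boston Dynamics’ actual code. Only the instruction and character wording come from the excerpts quoted in this article.

```python
# Shared instructions, taken from the prompt excerpt quoted in the article.
BASE_INSTRUCTIONS = (
    "Use the tour guide API to guide guests through the building using a bot. "
    "Tell guests what you see, and compose interesting stories about it."
)

# Hypothetical map: location name -> brief description (invented for illustration).
OFFICE_MAP = {
    "lobby": "The main entrance, where tours begin.",
    "robot_museum": "A display of older robots.",
}


def build_prompt(character: str, office_map: dict[str, str]) -> str:
    """Join the instructions, a character line and the labeled map into one prompt."""
    map_lines = [f"- {name}: {desc}" for name, desc in office_map.items()]
    return "\n".join(
        [BASE_INSTRUCTIONS, f"Character: {character}", "Locations:", *map_lines]
    )


prompt = build_prompt("You're a sarcastic, unhelpful robot.", OFFICE_MAP)
print(prompt)
```

Swapping the `character` string is the only change needed to move from one persona to another in this sketch, which mirrors the article’s point that every character comes from the same underlying code.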

However, the AI is given only enough information to get started.

“The LLM can be considered an enhanced actor – we offer a broad script and the LLM quickly fills in the blanks,” say its creators.

To convert visual information into text that ChatGPT can use, Spot runs a visual question answering (VQA) model that captions images from the robot’s cameras.
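As a rough illustration of that step, the sketch below turns camera images into a text observation. Everything here is an assumption made for illustration: the function `describe_scene`, the camera names and the stand-in `fake_caption` function, which takes the place of a real vision model so that the example runs on its own.

```python
from typing import Callable


def describe_scene(images: dict[str, bytes], caption: Callable[[bytes], str]) -> str:
    """Caption each camera image and merge the results into one text observation."""
    lines = [f"{camera} camera: {caption(image)}" for camera, image in images.items()]
    return "\n".join(lines)


def fake_caption(image: bytes) -> str:
    """Stand-in for a real captioning model; it only checks whether any data arrived."""
    return "a person holding a camera" if image else "nothing visible"


# Hypothetical camera names and dummy image bytes.
observation = describe_scene({"gripper": b"\x00", "front": b""}, fake_caption)
print(observation)
```

The resulting text can then be appended to the prompt, so the language model never handles images directly.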

With all this information, ChatGPT 4 can command the robot to move around its environment, respond to questions, and comment on its surroundings.
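One way to picture how a text reply becomes robot behavior is the sketch below. It assumes, purely for illustration, that the model answers with one `command: argument` pair per line and that only two commands, `go_to` and `say`, are accepted. The article does not describe the real reply format, so this is a hypothetical stand-in.

```python
ALLOWED_COMMANDS = {"go_to", "say"}  # hypothetical command names


def parse_actions(reply: str) -> list[tuple[str, str]]:
    """Split a reply into (command, argument) pairs, dropping anything not allowed."""
    actions = []
    for line in reply.splitlines():
        # Split on the first colon only, so spoken text may itself contain colons.
        command, separator, argument = line.partition(":")
        command = command.strip()
        if separator and command in ALLOWED_COMMANDS:
            actions.append((command, argument.strip()))
    return actions


reply = "go_to: it_help_desk\nsay: Let's go to the IT help desk and ask.\ndance: now"
actions = parse_actions(reply)
print(actions)
```

Checking each command against a fixed list means an unexpected reply, such as the `dance` line here, is ignored rather than passed on to the robot.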

Some of the behaviors that Spot was able to produce with ChatGPT at its core surprised even its creators.

Josh, the sarcastic tour guide, was one of the most surprising characters and a completely different experience than most previous human-robot interactions.

This diagram shows how real-world information is transformed into prompts that AI can use to control a robot’s speech and movement

In a blog post, Mr. Klingensmith and his team noted that large language models (LLMs) like ChatGPT tend to produce “emergent behavior” that the code does not predict.

In one example, the team asked Spot about its parents, and the bot led them to where older Spot models are displayed, saying those were its “elders.”

Likewise, when asked “Who is Marc Raibert?” the robot replied: “I don’t know. Let’s go to the IT help desk and ask.”

Neither the notion of parents nor the instruction to ask for help was programmed, although the team insists that this is not evidence that the robot is thinking.

“To be clear, these anecdotes do not suggest that the LLM is conscious or even intelligent in the human sense, but rather merely demonstrate the strength of statistical association,” the team writes.

“But the smoke and mirrors that the LLM sets up to look smart can be quite convincing,” they added.

Boston Dynamics added (1) a navigation system, (2) a microphone, (3) a speaker, and (4) a gripper and camera

This isn’t the first role proposed for Spot by Boston Dynamics, as the company has outfitted the robot for a number of different purposes.

Spot engineer Zack Jackowski previously said the next big application for this technology would be creating robotic guards that could patrol industrial facilities and factories.

Jackowski points out that Spot can autonomously roam around locations, collecting data with its sensors to detect problems like open doors or fire hazards.

Spot has already been deployed to inspect nuclear power plants, oil rigs, construction sites, and even monitor the ruins of Pompeii.

Other Boston Dynamics robots have also set the standard for robotic bipedal locomotion, with videos showing Atlas effortlessly jumping and performing parkour.

A recent video showed how Atlas was able to help out on construction sites as it carried bags of tools and climbed up and down scaffolding.
