Biden rolls out ‘most sweeping actions ever taken’ to control artificial intelligence, mandating safety tests so the tech isn’t used to make nuclear or biological weapons… (and AI czar Kamala Harris will oversee it)

President Joe Biden has unveiled the most sweeping measures ever taken to control artificial intelligence to ensure the technology cannot be weaponized.

The order, unveiled Monday, will require developers such as Microsoft, OpenAI and Google to conduct safety tests and provide results before releasing models to the public.

These findings will be analyzed by federal agencies, including the Department of Homeland Security, to address threats to critical infrastructure as well as chemical, biological, radiological, nuclear and cybersecurity risks.

Biden believes the government was late to address the dangers of social media, and that young Americans are now grappling with the related mental health issues.

Monday’s executive order is an “urgent” step to rein in the technology before it distorts basic notions of truth, deepens racial and social inequalities, becomes a tool for fraudsters and criminals, or is used in warfare.

Nearly 15 leading companies have agreed to implement voluntary commitments related to AI safety, but the executive order aims to provide concrete regulation for the development of the technology.

Several companies, including Microsoft and OpenAI, also testified before Congress, where they were questioned about the safety of their chatbots.

Vice President Kamala Harris — who has the lowest approval rating of any vice president — was named “AI czar” in May, tasked with leading the charge on the AI race amid growing concerns that the technology could upend life as we know it.

However, Harris has remained quiet in the role since her appointment.

She is scheduled to speak at the UK AI Safety Summit on November 2.

The new order reflects the government’s efforts to shape how AI develops in a way that maximizes its potential and contains its risks.

Artificial intelligence has been a source of deep personal interest for Biden, given its potential to affect the economy and national security.

The order, unveiled Monday, will require developers such as Microsoft, OpenAI — the creator of ChatGPT — and Google to conduct safety tests and submit results before releasing models to the public.

Deepfakes are spreading misinformation, AI bots are scamming people out of money, and chatbots are showing signs of bias.

In May, Congress grilled OpenAI founder Sam Altman for five hours about how ChatGPT and other models are reshaping “human history” for better or worse, with the technology likened to either the printing press or the atomic bomb.

White House Chief of Staff Jeff Zients said Biden directed his team to act urgently on the issue, treating the technology as a top priority.

“We cannot move at a normal government pace,” Zients said the Democratic president told him. “We have to move as quickly, if not faster, than the technology itself.”

AI companies conduct their own tests to weed out misinformation, bias, or racism.

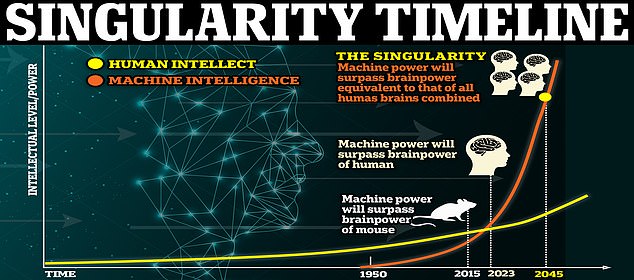

Fears about artificial intelligence come as some experts predict that the technology will reach the singularity, the point at which it surpasses human intelligence and can no longer be controlled, by 2045.

The order invokes the Defense Production Act to require leading AI developers to share safety test results and other information with the government.

Under the Defense Production Act, Monday’s order will require companies developing artificial intelligence to notify the federal government if their models show signs of posing a risk to national security or public health and safety.

The National Institute of Standards and Technology will develop standards to ensure the safety of AI tools before public release.

The Department of Commerce will issue guidance on labeling and watermarking AI-generated content to help people distinguish between human-created and AI-generated material.

The order also aims to “protect against the risks of using artificial intelligence to engineer hazardous biological materials,” which entails introducing “robust new standards for biosynthesis screening.”

The order also addresses privacy, civil rights, consumer protection, scientific research, and workers’ rights.

An administration official who previewed the order on a Sunday call with reporters said the tasks set out in the order would be carried out over periods ranging from 90 to 365 days, with the safety and security items facing the earliest deadlines.

While much of the order addresses the risks of artificial intelligence, Biden recognizes the technology’s potential to benefit the public by making products better, cheaper and more widely available.

One area where that potential could show up is the development of affordable, life-saving medications. The executive order also states that “the Department of Health and Human Services will also establish a safety program to receive reports of harm or unsafe health care practices and act to remedy them” when those practices involve artificial intelligence.

“Artificial intelligence is everywhere in our lives. It will be more widespread,” Zients said.

“I think this is an important part of making our country a better place and making our lives better… At the same time, we have to avoid the negative aspects.”

Although Congress is still in the early stages of discussing AI safeguards, Biden’s order sets out an American approach as countries around the world race to establish their own guidelines.

After more than two years of deliberations, the European Union is finalizing a comprehensive set of regulations targeting the riskiest applications of this technology. China, the United States’ main competitor in the field of artificial intelligence, has also set some rules.

British Prime Minister Rishi Sunak is also hoping to carve out a prominent role for Britain as an AI safety hub at this week’s summit, which Vice President Kamala Harris plans to attend.

(Tags for translation)dailymail