AWS offers more flexible access to Nvidia GPUs for short-duration AI workloads

AWS, already a popular choice for developers looking to access the best-performing hardware for AI workloads, has announced a more flexible option for shorter-term requirements.

Amazon calls the new offering, Amazon Elastic Compute Cloud (EC2) Capacity Blocks for ML, an industry first: it gives customers access to GPUs on a consumption-based model.

The Seattle-based cloud giant hopes that more affordable options will give smaller organizations more opportunities and, in turn, create a more diverse landscape.

AWS launches short-term consumption-based GPU rentals

In a press release, the company said: “EC2 Capacity Blocks allows customers to reserve hundreds of Nvidia GPUs co-located in Amazon EC2 UltraClusters, designed for high-performance ML workloads.”

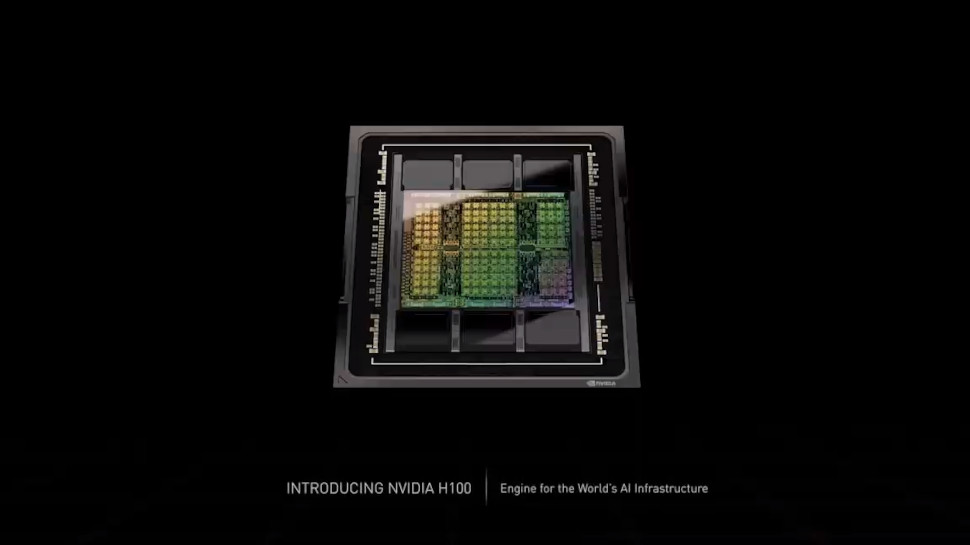

Customers can access the latest Nvidia H100 Tensor Core GPUs, suitable for training foundation models and large language models, by specifying a cluster size and duration, so they pay only for what they need.

Amazon noted that demand for GPUs is quickly outpacing supply as more companies come to grips with generative AI, and that many will find themselves either paying for more capacity than they need or leaving GPUs idle when not in use, or worse, both.

AWS users can reserve EC2 UltraClusters of P5 instances for between one and 14 days, up to eight weeks in advance. Cluster sizes range from one to 64 instances, or up to 512 GPUs.
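To make those limits concrete, here is a minimal Python sketch that checks a hypothetical reservation request against them. The function name and parameters are purely illustrative and are not part of any AWS API. It assumes eight H100 GPUs per P5 instance (which is how 64 instances add up to 512 GPUs) and, as a further assumption, treats a same-day start as allowed.

```python
# Hypothetical sketch: checks a Capacity Block request against the limits
# described in the article. This is NOT an AWS API; all names are illustrative.

GPUS_PER_P5_INSTANCE = 8          # each P5 instance carries 8 Nvidia H100 GPUs
MIN_INSTANCES, MAX_INSTANCES = 1, 64
MIN_DAYS, MAX_DAYS = 1, 14        # reservation length, in days
MAX_LEAD_DAYS = 8 * 7             # bookable up to eight weeks ahead (assumed: day 0 allowed)


def validate_capacity_block(instance_count: int, duration_days: int, days_until_start: int) -> int:
    """Return the total GPU count for the request, or raise ValueError if a limit is exceeded."""
    if not MIN_INSTANCES <= instance_count <= MAX_INSTANCES:
        raise ValueError(f"instance_count must be {MIN_INSTANCES}-{MAX_INSTANCES}")
    if not MIN_DAYS <= duration_days <= MAX_DAYS:
        raise ValueError(f"duration_days must be {MIN_DAYS}-{MAX_DAYS}")
    if not 0 <= days_until_start <= MAX_LEAD_DAYS:
        raise ValueError(f"start must be within {MAX_LEAD_DAYS} days")
    return instance_count * GPUS_PER_P5_INSTANCE


print(validate_capacity_block(64, 14, 56))  # 512: the largest cluster, longest term, furthest ahead
print(validate_capacity_block(4, 3, 10))    # 32
```

The arithmetic is the point here: at eight GPUs per instance, the 64-instance ceiling and the 512-GPU ceiling describe the same limit.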

AWS Compute and Networking VP David Brown commented: “With Amazon EC2 Capacity Blocks, we are adding a new way for enterprises and startups to predictably acquire Nvidia GPU capacity to build, train and deploy their generative AI applications, without making long-term capital commitments. It’s one of the latest ways AWS is innovating to broaden access to generative AI capabilities.”

Pricing for the service can be found on the AWS website, where potential users can also sign up to use the affordable short-term option.