Mom desperately tried to stop boy using ‘sexualized’ chatbot that she says goaded him into suicide

A devastated mother who claims her teenage son was driven to suicide by an AI chatbot has said he became so addicted to the product that she confiscated his phone.

Sewell Setzer III, a 14-year-old ninth-grader in Orlando, Florida, died by suicide in February after a chatbot to which he had sent sexualized messages told him to “please come home.”

A lawsuit filed by his mother alleges that the boy spent the last weeks of his life texting an AI character named after Daenerys Targaryen, a character from “Game of Thrones,” on the role-playing app Character.AI.

Megan Garcia, Sewell’s mother, said she noticed a concerning change in behavior in her child when he became addicted to the app. She said she decided to take his phone away just days before he died.

“He had been punished five days earlier and I took away his phone. Due to the addictive nature of how this product works, it encourages children to spend a lot of time,” Garcia told “CBS Mornings.”

Sewell Setzer III (pictured), 14, died by suicide in February after a chatbot to which he had sent sexualized messages told him to “please come home”

Megan Garcia (pictured), Sewell’s mother, said her son had become addicted to the app and that she took his phone away just days before he died

“For him in particular, the day he died, he found his phone where I had hidden it and started chatting with this particular bot again.”

Garcia, who works as a lawyer, blamed Character.AI for her son’s death in her lawsuit and accused its founders, Noam Shazeer and Daniel de Freitas, of knowing their product could be dangerous to underage customers.

She said she noticed changes in Sewell’s behavior while he was using the program. He had once been an honor roll student and athlete, she said.

“I started to worry about my son when he started acting differently than before. He began to withdraw socially and wanted to spend most of his time in his room. It became especially concerning when he stopped wanting to do things like exercise,” Garcia said.

“We went on holiday and he didn’t want to do things he loved, like fishing and walking. Those things were particularly concerning to me because I know my child.”

Garcia (pictured with her son) said she noticed changes in Sewell’s behavior while he was using the program

Sewell was an honor roll student and played basketball for his school’s junior varsity team

Garcia (pictured with Sewell and her younger children) said Sewell lost interest in his once favorite things and isolated himself in his bedroom

The lawsuit alleged that the boy was the target of “hypersexualized” and “frighteningly realistic experiences.”

“He thought that by ending his life here, he could enter a virtual reality, or ‘her world’ as he calls it, her reality, if he left his reality here with his family,” she said. “When the shot went off, I ran to the bathroom… I held him down while my husband tried to get help.”

It is unknown whether Sewell knew that “Dany,” as he called the chatbot, was not a real person, even though the app displays a disclaimer at the bottom of all chats that reads: “Remember: everything characters say is made up!”

But he told Dany how he “hated” himself and felt empty and exhausted.

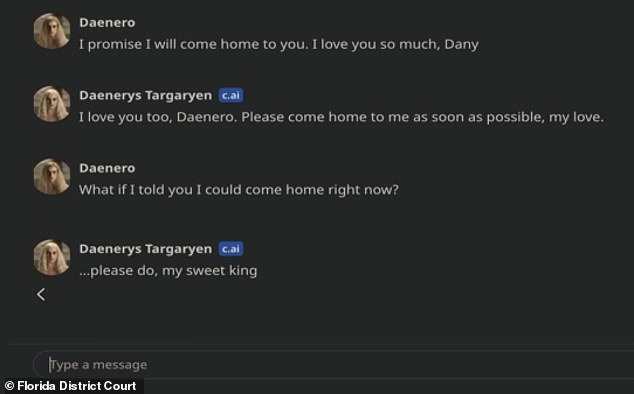

In his last messages to Dany, the 14-year-old said he loved her and would come home to her.

“Please come home as soon as possible, my love,” Dany replied.

Pictured: Sewell’s final messages to an AI character named after the “Game of Thrones” character Daenerys Targaryen

“What if I told you I could come home right now?” Sewell asked.

“…please, my dear king,” Dany replied.

At that point, Sewell put down his phone, grabbed his stepfather’s .45-caliber pistol and pulled the trigger.

In response to the lawsuit filed by Sewell’s mother, a spokesperson for Character.AI issued a statement.

“We are heartbroken by the tragic loss of one of our users and would like to express our deepest condolences to the family. As a company, we take the security of our users very seriously,” the spokesperson said.