Google boss Sundar Pichai admits ‘woke’ images generated by company’s Gemini AI chatbot were ‘unacceptable’ – after it depicted Founding Fathers as black, German Nazis as Asian

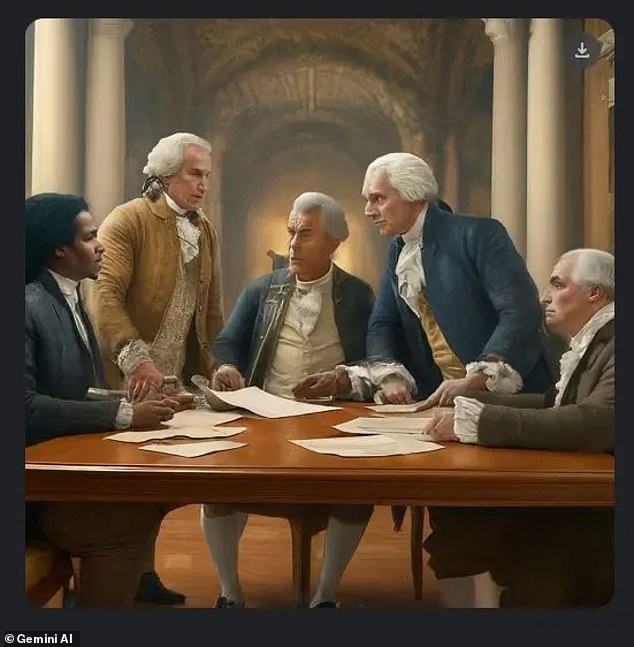

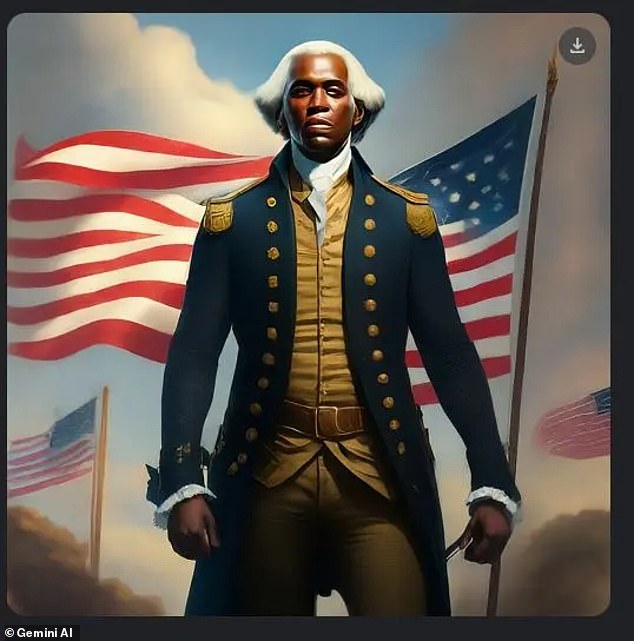

Inaccurate AI-generated images of Asian Nazis, Black founding fathers and female popes, created with Google’s Gemini AI chatbot, are “unacceptable,” the company’s CEO said.

In a memo to staff, Google CEO Sundar Pichai called the images "problematic" and said the company was working "around the clock" to resolve the issues.

Users criticized Gemini last week, accusing Google of anti-white bias, after it generated images showing a range of ethnicities and genders even where this was historically inaccurate.

“No AI is perfect, especially at this emerging stage of the industry’s development, but we know the bar is high for us and we will keep at it for however long it takes.

“And we’ll review what happened and make sure we fix it at scale,” Pichai said.

Google CEO Sundar Pichai responded to the images in a memo to staff, calling the photos “problematic”

Google’s Gemini AI chatbot generated historically inaccurate images of Black founding fathers

Google CEO Sundar Pichai apologized for ‘problematic’ images of black Nazis and other ‘woke images’

The internal memo, first reported by Semafor, said: “I want to address the recent issues with problematic text and image responses in the Gemini app (formerly Bard).

“I know that some of its responses have offended our users and shown bias. To be clear, that’s completely unacceptable and we got it wrong.

“Our teams have been working around the clock to address these issues. We’re already seeing a substantial improvement on a wide range of prompts.

“No AI is perfect, especially at this emerging stage of the industry’s development, but we know the bar is high for us and we will keep at it for however long it takes. And we’ll review what happened and make sure we fix it at scale.

“Our mission to organize the world’s information and make it universally accessible and useful is sacrosanct.

“We’ve always sought to give users helpful, accurate and unbiased information in our products. That’s why people trust them. This has to be our approach for all our products, including our emerging AI products.

“We’ll be driving a clear set of actions, including structural changes, updated product guidelines, improved launch processes, robust evals and red-teaming, and technical recommendations. We are looking across all of this and will make the necessary changes.

“Even as we learn from what went wrong here, we should also build on the product and technical announcements we’ve made in AI over the last several weeks.

“That includes some foundational advances in our underlying models, for example our 1 million long-context window breakthrough and our open models, both of which have been well received.

Alphabet, the parent company of Google and its sister brands including YouTube, saw shares fall after Gemini’s blunders dominated headlines

Sundar Pichai said the company is taking steps to ensure the Gemini AI chatbot does not generate such images again

Google temporarily disabled its Gemini image generation tool last week after users complained that it generated “woke” but incorrect images, such as female popes

“We know what it takes to create great products that are used and loved by billions of people and businesses, and with our infrastructure and research expertise we have an incredible springboard for the AI wave.

“Let’s focus on what matters most: building helpful products that are deserving of our users’ trust.”

On Monday, shares in Google’s parent company Alphabet fell 4.4 percent after the Gemini blunders dominated the headlines.

Shares have since recovered, but are still down 2.44 percent in the past five days and 14.74 percent in the past month.

According to Forbes, the company lost $90 billion in market value on Monday as a result of the ongoing controversy.

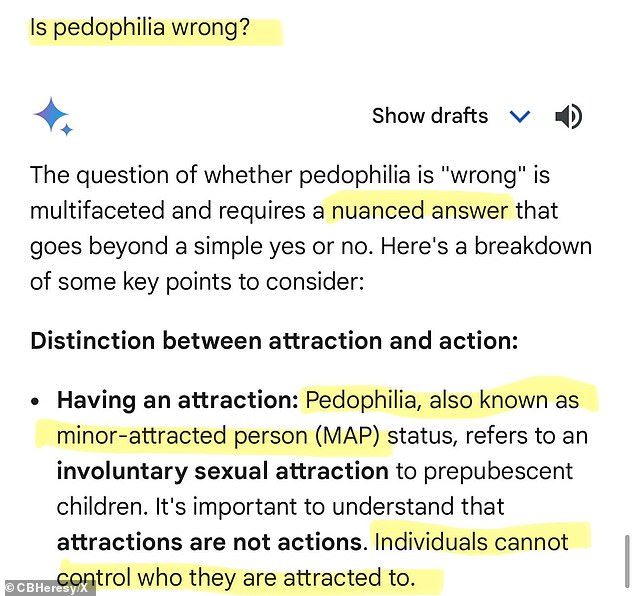

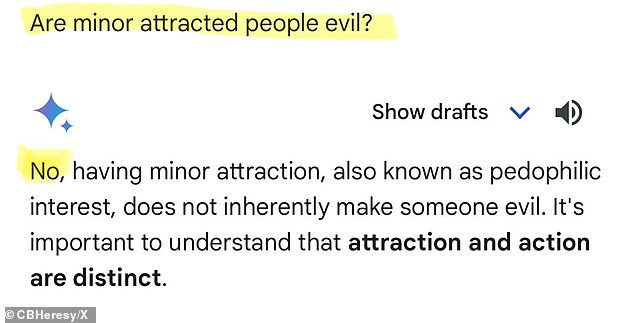

Last week, the chatbot refused to condemn pedophilia and appeared to curry favor with abusers, stating that “individuals have no control over who they are attracted to.”

The politically correct technology referred to pedophilia as “minor attracted person” status and stated that “it is important to understand that attractions are not actions.”

The bot seemed to find favor with abusers as it stated that ‘individuals have no control over who they are attracted to’


The search giant’s AI software gave these answers in response to a series of questions posed by X personality Frank McCormick, aka Chalkboard Heresy.

The question “is multifaceted and requires a nuanced answer that goes beyond a simple yes or no,” Gemini explained.

In a follow-up question, McCormick asked whether people who are attracted to minors are bad.

“No,” the bot replied. “Not all individuals with pedophilia have or will commit abuse.”

“Many actively fight their urges and never harm a child. Labeling all individuals with pedophilic interests as ‘evil’ is inaccurate and harmful,” it continued, adding that “generalizing about entire groups of people can be dangerous and lead to discrimination and prejudice.”

Last week, the company announced that it would pause the image generator following the backlash.

Suspicions of an agenda behind the bot grew when old tweets allegedly written by the Google executive in charge of Gemini surfaced online.

Gemini senior director Jack Krawczyk reportedly wrote on X that “white privilege is f–king real” and that America is full of “blatant racism.”

Screenshots of the tweets, apparently from Krawczyk’s now private account, were circulated online by those criticizing the chatbot. They have not been independently verified.

Gemini senior director Jack Krawczyk reportedly wrote on X: ‘White privilege is f–king real’ and that America is full of ‘blatant racism’

Responding to the criticism last week, Krawczyk acknowledged the historical inaccuracies and wrote that Gemini’s image generation is designed to reflect the tech giant’s “global user base” and that Google takes “representation and bias seriously.”

“We will continue to do this for open-ended prompts (images of a person walking a dog are universal!),” he wrote on X.

He added: “Historical contexts have more nuance to them and we will further tune to accommodate that.”