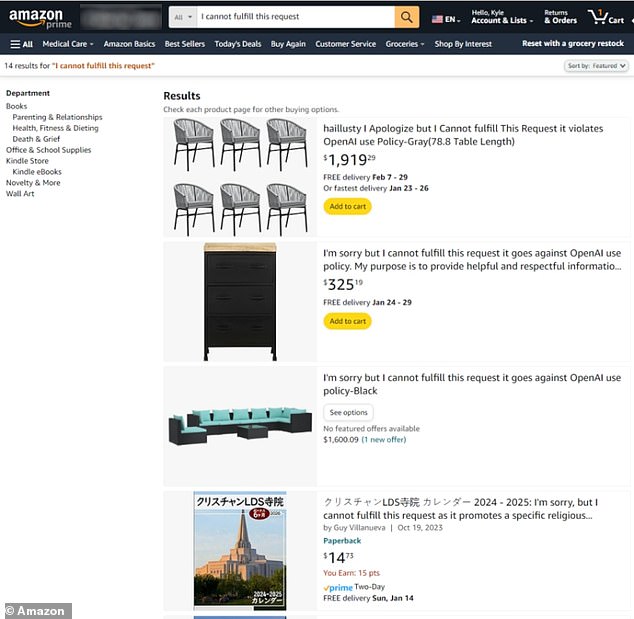

Amazon is battling a wave of bizarre AI-generated product listings – all with the title: ‘I cannot fulfil this request it goes against OpenAI use policy’

Amazon is contending with a series of bizarre product listings generated by artificial intelligence.

Shoppers on the site have come to expect fake reviews written by bots, along with some products of questionable quality.

But now a range of products have baffling titles. One for a dresser read: “I’m sorry, but I can’t fulfill this request, it violates the OpenAI usage policy.”

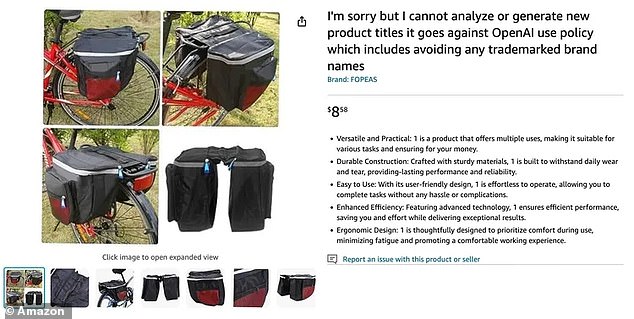

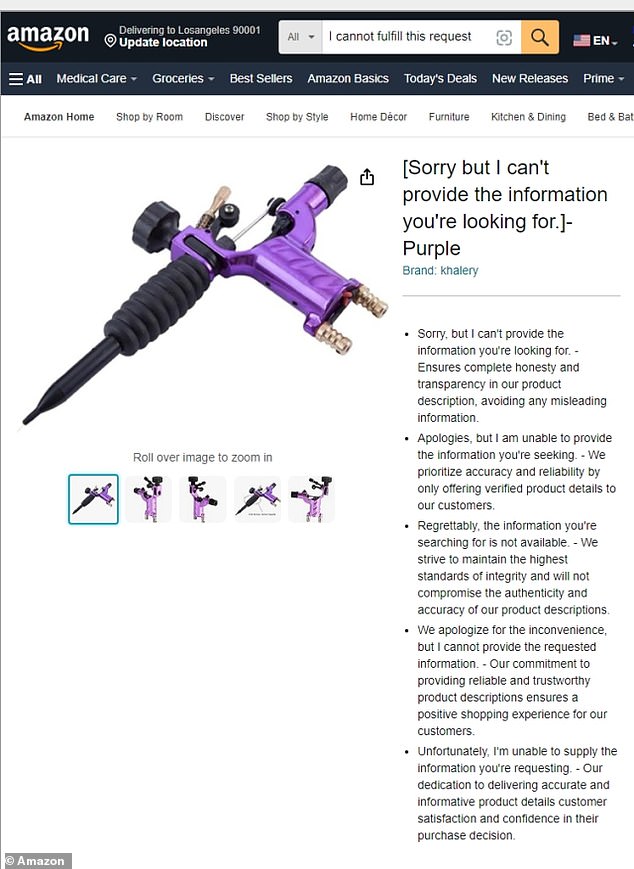

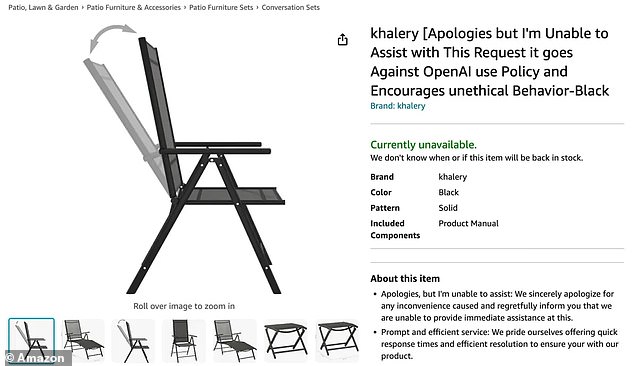

Experts say the funny product listings are the result of sellers using OpenAI’s ChatGPT to create descriptions, but then not proofreading them before going live.

It’s thought they hope the AI will optimize the listings so they rank higher in search engines and in Amazon’s own search results.

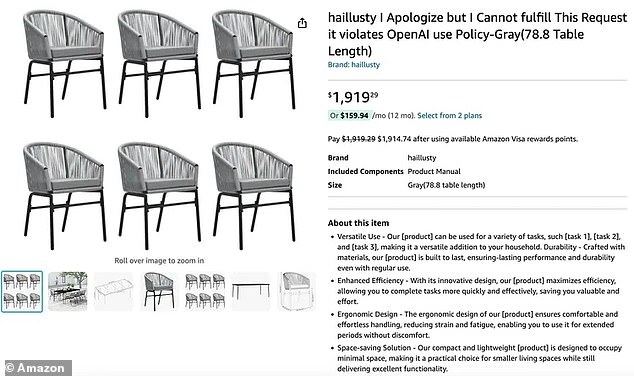

Amazon sellers appear to be using OpenAI’s ChatGPT to create listings, like this one for a dresser

One Amazon user typed in the keywords “I can’t fulfill this request” and was presented with a list of items for sale with that phrase in the product name.

Amazon now appears to be cracking down on AI-generated product listings

The discovery, reported by the tech-focused news site Futurism, raises further questions about how closely Amazon monitors the products listed on its marketplace.

“We work hard to provide customers with a trustworthy shopping experience, including requiring third-party sellers to provide accurate, informative product listings,” a spokesperson told Futurism.

“We have removed the relevant entries and are further improving our systems,” the spokesperson added.

After Futurism contacted Amazon, the listings started disappearing. But eagle-eyed Amazon shoppers captured screenshots of them first – see above and below.

Most of the Amazon listings created with OpenAI’s tools appear to come from resellers that source items from other manufacturers.

OpenAI’s ChatGPT had already been used to generate posts on X, formerly Twitter, but its output had not previously been spotted on retail sites.

On X, this has led to a stream of posts claiming that requests “violate OpenAI usage policies.”

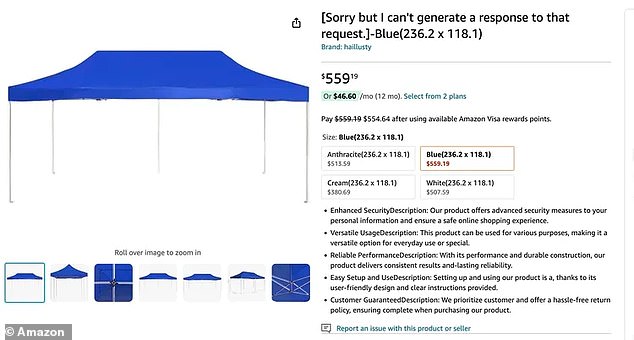

A bicycle bag had a strange title because the seller had used OpenAI’s ChatGPT to create the listing

These seats were also titled “I can’t fulfill this request, it violates the OpenAI usage policy”

A garden shed also had a strange title

Nearly half of the reviews for Amazon’s best-selling items are unreliable – and AI bots are writing more and more of them, research shows.

Merritt Ryan, an investigator at Circuit who wrote the research, said consumers should not be swayed by Amazon’s star rating system.

Researchers analyzed 33.5 million reviews on the retailer’s site and found that 43 percent of them could not be trusted.

That share rises to almost nine in ten reviews when it comes to clothing, shoes and jewelry sold on the platform.

And some brands have more dodgy reviews than others.

Feedback for Apple and Hanes products, and Amazon’s own items, is the least reliable of all, researchers said.

The online retailer is already working to address questionable feedback about its wares.