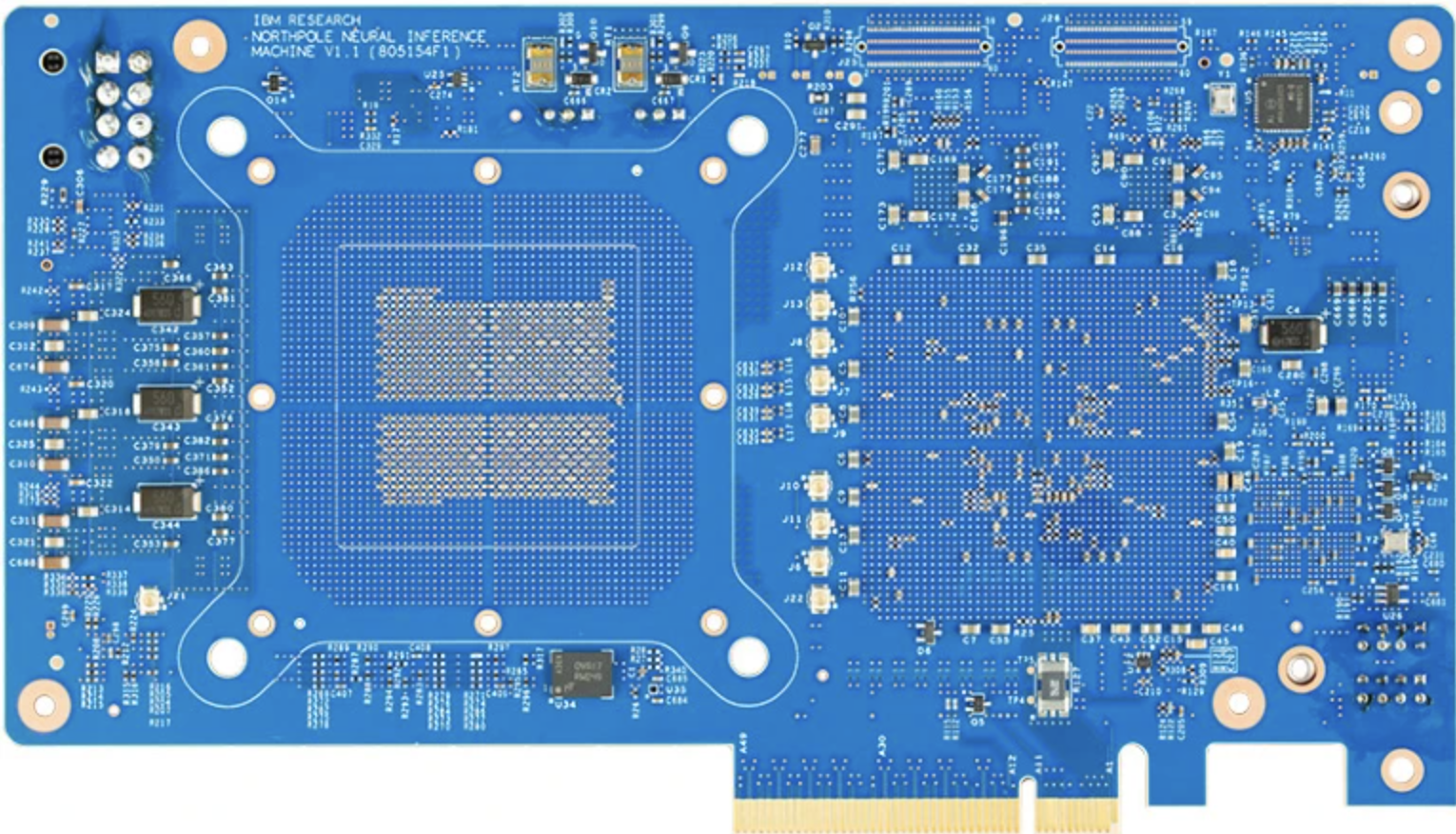

Inspired by the human brain: how IBM’s latest AI chip could be 25 times more efficient than GPUs by integrating memory and computing, but neither Nvidia nor AMD has to worry just yet

Researchers have developed a processor designed to run neural networks that can perform AI tasks much faster than conventional chips by eliminating the need to access external memory.

Even the best CPUs run into data-processing bottlenecks, because data must constantly be shuttled between the processor and separate RAM, and this traffic wastes time and energy. IBM hopes to overcome this so-called von Neumann bottleneck with its NorthPole chip, as reported by Nature.

The NorthPole processor integrates a small amount of memory into each of its 256 cores, which are linked to one another in a way that resembles how regions of the brain are connected by white matter. As a result, the chip effectively eliminates the bottleneck.
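To make the idea concrete, here is a minimal toy model of why keeping weights next to the compute helps. It counts how many bytes of weights cross an external memory bus over many inference passes. Everything in it is an illustrative assumption, not a description of NorthPole's real design: the three-layer network is made up, each layer is assumed to sit on a single core, and the 224 MB of on-chip memory is assumed to be split evenly across the 256 cores (decimal megabytes).

```python
from dataclasses import dataclass

# Assumption: 224 MB (decimal) split evenly across 256 cores.
LOCAL_BYTES_PER_CORE = 224_000_000 // 256  # 875,000 bytes per core


@dataclass
class Layer:
    name: str
    weight_bytes: int  # size of this layer's weights in bytes


def bus_traffic_conventional(layers: list[Layer], passes: int) -> int:
    """Weights live in external RAM and are re-fetched on every inference pass."""
    return passes * sum(layer.weight_bytes for layer in layers)


def bus_traffic_near_memory(layers: list[Layer], passes: int,
                            local_bytes_per_core: int = LOCAL_BYTES_PER_CORE) -> int:
    """Hypothetical near-memory design: each layer is pinned to one core.
    Weights that fit in that core's local memory are loaded once up front;
    layers too large to fit are still streamed in on every pass."""
    resident = sum(layer.weight_bytes for layer in layers
                   if layer.weight_bytes <= local_bytes_per_core)
    streamed = sum(layer.weight_bytes for layer in layers
                   if layer.weight_bytes > local_bytes_per_core)
    return resident + passes * streamed


# A made-up three-layer network, run 1,000 times.
net = [Layer("conv1", 500_000), Layer("conv2", 800_000), Layer("fc", 300_000)]
print("conventional:", bus_traffic_conventional(net, 1_000), "bytes")
print("near-memory: ", bus_traffic_near_memory(net, 1_000), "bytes")
```

In this toy setup the conventional design moves the weights across the bus on every pass, while the near-memory design moves them only once. The model ignores activations, traffic between cores, and how a real compiler would spread layers over many cores.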

Looking for inspiration from the human brain

IBM’s NorthPole is more of a proof of concept than a finished chip that can compete with the likes of AMD and Nvidia. For example, it contains only 224 MB of memory, which is nowhere near enough for large-scale AI workloads such as large language models (LLMs).
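Some back-of-the-envelope arithmetic shows the size of that gap. The 7-billion-parameter model below is a hypothetical example, 224 MB is read as 224 × 10^6 bytes, and only the model's weights are counted (activations and other overhead are ignored), so the real shortfall would be larger.

```python
ON_CHIP_BYTES = 224 * 10**6  # 224 MB as reported; decimal megabytes assumed


def weight_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store a model's weights at a given precision."""
    return num_params * bits_per_param // 8


def max_params_that_fit(capacity_bytes: int, bits_per_param: int) -> int:
    """Largest parameter count whose weights fit in the given capacity."""
    return capacity_bytes * 8 // bits_per_param


llm_params = 7 * 10**9  # hypothetical 7-billion-parameter LLM
needed = weight_bytes(llm_params, 16)
print(f"7B params at 16-bit: {needed / 1e9:.0f} GB, "
      f"{needed / ON_CHIP_BYTES:.1f}x the on-chip memory")

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: at most {max_params_that_fit(ON_CHIP_BYTES, bits):,} parameters")
```

Even with aggressive 4-bit quantization, the weights alone would cap a model at a few hundred million parameters.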

The chip is also limited to running neural networks that have already been trained on separate systems. Its real highlight, thanks to its unusual architecture, is its energy efficiency. The researchers claim that if NorthPole were manufactured today using a state-of-the-art process, it would be 25 times more efficient than the best GPUs and the best CPUs.

“The energy efficiency is simply astonishing,” Damien Querlioz, a nanoelectronics researcher at the University of Paris-Saclay in Palaiseau, told Nature. “The work, published in Science, shows that computing and memory can be integrated on a large scale,” he said. “I feel like the article will turn mainstream thinking in computer architecture on its head.”

It can also outperform existing AI systems at tasks such as image recognition. In a neural network, the bottom layer takes in raw data, such as the pixels of an image, and each subsequent layer detects patterns that grow more complex as information passes from one layer to the next. The top layer then produces the final result, for example, whether an image contains a certain object.
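The layered flow described above can be sketched with a tiny network in plain Python. This is only a toy illustration of the general idea, not how NorthPole is programmed: the 2×2 "image", the two hidden features, and all of the weights are hypothetical and hand-picked, whereas a real network learns millions of weights during training.

```python
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron weights every input,
    adds a bias, then applies an activation function."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, hand-picked weights. Pixels are ordered
# [top-left, top-right, bottom-left, bottom-right] in a 2x2 grayscale image.
HIDDEN_W = [
    [1.0, -1.0, 1.0, -1.0],  # feature 0: left column brighter than right
    [1.0, 1.0, -1.0, -1.0],  # feature 1: top row brighter than bottom
]
HIDDEN_B = [0.0, 0.0]
OUTPUT_W = [[2.0, -1.0]]  # "object" = a bright bar on the left, not along the top
OUTPUT_B = [-1.0]


def forward(pixels):
    hidden = dense(pixels, HIDDEN_W, HIDDEN_B, relu)       # pixels -> simple features
    (score,) = dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)  # features -> final answer
    return score


for name, img in [("left bar", [1, 0, 1, 0]),
                  ("right bar", [0, 1, 0, 1]),
                  ("top bar", [1, 1, 0, 0])]:
    print(f"{name}: probability of object = {forward(img):.2f}")
```

Only the "left bar" image scores above 0.5, because it is the only input that strongly activates the feature the output layer is looking for.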